Bitcoin and the Quantum Problem – Part II: The Quantum Supremacy

Disclosure: I hold long positions in QTUM (quantum ETF) and Bitcoin (and other quantum-vulnerable digital assets). CIV is an investor in Project Eleven. CIV holds a long position in Bitcoin and other digital assets.

This is Part II in a 3-part series on Bitcoin and Quantum Computing. Find part one here:

Introduction

Most of you are familiar with the concept of expected value. Simply put, it’s the odds of something happening times the goodness or badness of that thing, if it does happen. This kind of logic is how people get extremely concerned about things like the emergence of a misaligned superintelligence. Even if the odds of a powerful malevolent AI are very low, the possible damage (the extinction of all sentient life, perhaps) is so extraordinarily high that we can assign a highly negative expected value to the overall scenario. Thus, it potentially outweighs in importance near term but more prosaic concerns.

There’s actually an argument to be made that since the damage from a misaligned superintelligence is potentially infinite (from a human perspective), any nonzero probability means we should expend an enormous amount of present-day resources to make sure that outcome doesn’t come to pass.
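In symbols, and purely as a restatement of the two paragraphs above (p, v and L are shorthand introduced here for probability, payoff and size of the loss, not notation from any cited source):

```latex
\mathbb{E}[V] \;=\; \sum_i p_i \, v_i ,
\qquad
\mathbb{E}[V_{\text{catastrophe}}] \;=\; p \cdot (-L) + (1 - p) \cdot 0 \;=\; -\,pL .
```

If L is allowed to grow without bound, then −pL does too for any fixed p > 0, which is the "infinite damage" argument in its barest form.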

In the real world, though, we don’t spend all of our time worrying about how to prevent unlikely-but-very-bad things like misaligned AGI, gamma ray bursts, local supernovae, or wayward meteors.[1] This might be due to a quirk in human intuition whereby we underrate catastrophic or existential risks. Most humans don’t go about their days relying on consequentialist calculations to determine how they should spend their time and resources. Expected value-based reasoning makes most sense when probabilities and payoffs are known or guessable, as in finite games like poker.

If you’ll indulge me, I’ll posit in this piece that the expected value of the emergence of a cryptographically relevant quantum computer (CRQC) is sufficiently negative for Bitcoin that it should motivate us to take action today. I’ll first explain that the “payoff” of a CRQC on Bitcoin is highly negative, then explain why I think[2] we have enough data to assess that the odds are high enough to worry us today.

And I want to add a note on my credentials and the purpose of this piece. I’m not a mathematician or physicist, I barely passed statistics in grad school, and I actually quit economics in undergrad because I hated the math so much. My understanding of quantum physics is that of an educated layperson who has read a few books about it and talked to a few experts. I don’t claim in this piece to have any specific insight regarding the nature of quantum computing or its impact on cryptography at all. All I am doing is packaging up publicly available data and presenting it in a way that’s intelligible to the average Bitcoin holder. I am an information retailer, not a wholesaler. I am not claiming to be good at physics or cryptography. My skill, to the extent I have one, is applying an investor’s mindset to the narratives and data swirling around and making risk-based assessments. That’s where I perceive a gap in the discourse and that’s the point of this series.

With that said, let’s investigate what a quantum computer is, and how it breaks the discrete log assumption that we learned about in Part I.

What is quantum computing, again?

You sometimes hear people describe QC with some version of the following: “A quantum computer spins up parallel universes, evaluates every possibility simultaneously, and then picks the right answer from the multiverse.” In a crypto context, you might imagine a QC is using Shor to “try every private key in many different universes, do the computation out there, and deliver the correct result in our universe.”

But that’s not what’s going on.

A quantum computer doesn’t “run really fast” or “outsource computation to other universes”. It’s a machine that relies on the rules of quantum mechanics instead of the rules of classical probability. In other words, QC is what happens when you use the universe as it actually is, rather than pretending it’s classical (which is what ordinary computers do).

We know that the universe is quantum. As in, quantum mechanics is the right theory to describe the behavior of subatomic particles, and it’s been empirically proven.

Computing, historically, is “classical”. That means a system has definite properties at all times. A bit is 1 or 0. Measurement simply reveals the state of the system.

The actual world is quantum. In the quantum world, systems don’t sit in neat, definite states. They exist in superpositions: combinations of possibilities with complex amplitudes attached. A “quantum bit” (qubit) isn’t 0 or 1; it’s a weighted blend of both until you force a measurement. And measurement doesn’t simply “read” the state the way a classical computer would. It collapses the superposition into a single outcome and destroys the rest. Underneath the classical facade, the universe is running on wave functions, amplitudes, and interference patterns, not on discrete binary states.

Quantum computing simply acknowledges the universe as it actually is, and endeavors to build a computer that plays by those same rules.

So what does a quantum computer do?

Instead of flipping tiny switches between 0 and 1, a quantum computer manipulates genuine quantum states. A qubit is a blend of both 1 and 0, with weights attached to each possibility. When multiple qubits interact, they become entangled, meaning the whole system has to be described at once rather than qubit-by-qubit. The computation is basically the controlled evolution of this joint quantum state. Only at the very end do you measure it, which collapses all those possibilities into definite 0s and 1s. In short, a quantum computer is a device that carefully shapes and evolves a multi-qubit wave until a final measurement extracts a usable answer.
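A minimal sketch of that description, using nothing but lists of complex numbers. The helper name `measure` and the example amplitudes are illustrative choices, not taken from any quantum library. It shows a qubit as a pair of weights, an entangled pair as one joint list of four weights, and measurement as a random draw that returns a single definite outcome.

```python
import math
import random

def measure(amplitudes, rng):
    """Sample one basis state with probability |amplitude|^2 (the Born rule)."""
    probs = [abs(a) ** 2 for a in amplitudes]
    assert math.isclose(sum(probs), 1.0), "state must be normalized"
    return rng.choices(range(len(amplitudes)), weights=probs)[0]

# One qubit: a complex weight for |0> and a complex weight for |1>.
qubit = [math.sqrt(0.8), math.sqrt(0.2) * 1j]

# Two entangled qubits (a Bell state): weights for |00>, |01>, |10>, |11>.
# The pair cannot be written as two independent single-qubit lists.
bell = [1 / math.sqrt(2), 0, 0, 1 / math.sqrt(2)]

rng = random.Random(0)
ones = sum(measure(qubit, rng) for _ in range(10_000))
print(f"fraction of 1s: {ones / 10_000:.3f}")  # close to 0.2

outcomes = {measure(bell, rng) for _ in range(1_000)}
print(sorted(outcomes))  # only index 0 (|00>) and index 3 (|11>) appear
```

A real quantum computer never holds this list anywhere; the list is just the bookkeeping a classical machine needs in order to imitate it.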

We figured out we needed quantum computers in 1981 when Feynman observed that classical computers could not simulate quantum mechanics. The movement of 10 particles becomes 2¹⁰ amplitudes, so any meaningful physical simulation quickly becomes impossible classically. In 1985, Deutsch published a formal model of a quantum computer. And we didn’t have to wait very long until the most famous QC algorithm was devised by Peter Shor in 1994. Shor defined a way in which QCs could factor integers and compute discrete logs in polynomial time. Incredibly, there was at this point still no functioning QC prototype in any meaningful sense. We just realized that large integers could be factored with (a theoretical) QC, and that at some point in the future, most public key cryptography would be totally compromised.
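To put numbers on Feynman's observation, here is a rough sketch of how much memory a classical machine would need just to store the state. It assumes two-level particles (so n of them need 2^n amplitudes) and 16 bytes per complex amplitude; both are simplifying assumptions.

```python
def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes describing n two-level systems."""
    return 2 ** n_qubits

def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to store the full state, assuming 16 bytes per amplitude."""
    return amplitudes(n_qubits) * bytes_per_amplitude

for n in (10, 30, 50, 300):
    print(f"{n:>3} qubits: {amplitudes(n):.3e} amplitudes, "
          f"{state_vector_bytes(n):.3e} bytes")
```

Ten qubits fit in a few kilobytes and thirty need 16 GiB. By three hundred, the amplitude count exceeds common estimates of the number of atoms in the observable universe.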

Aside from factoring large integers and simulating particles, there’s not much else we know quantum computers are good for. But unfortunately for us, the one thing they are good at is the thing that forces Bitcoin into a reckoning.

There’s a couple more concepts to establish about quantum computing before we dive in.

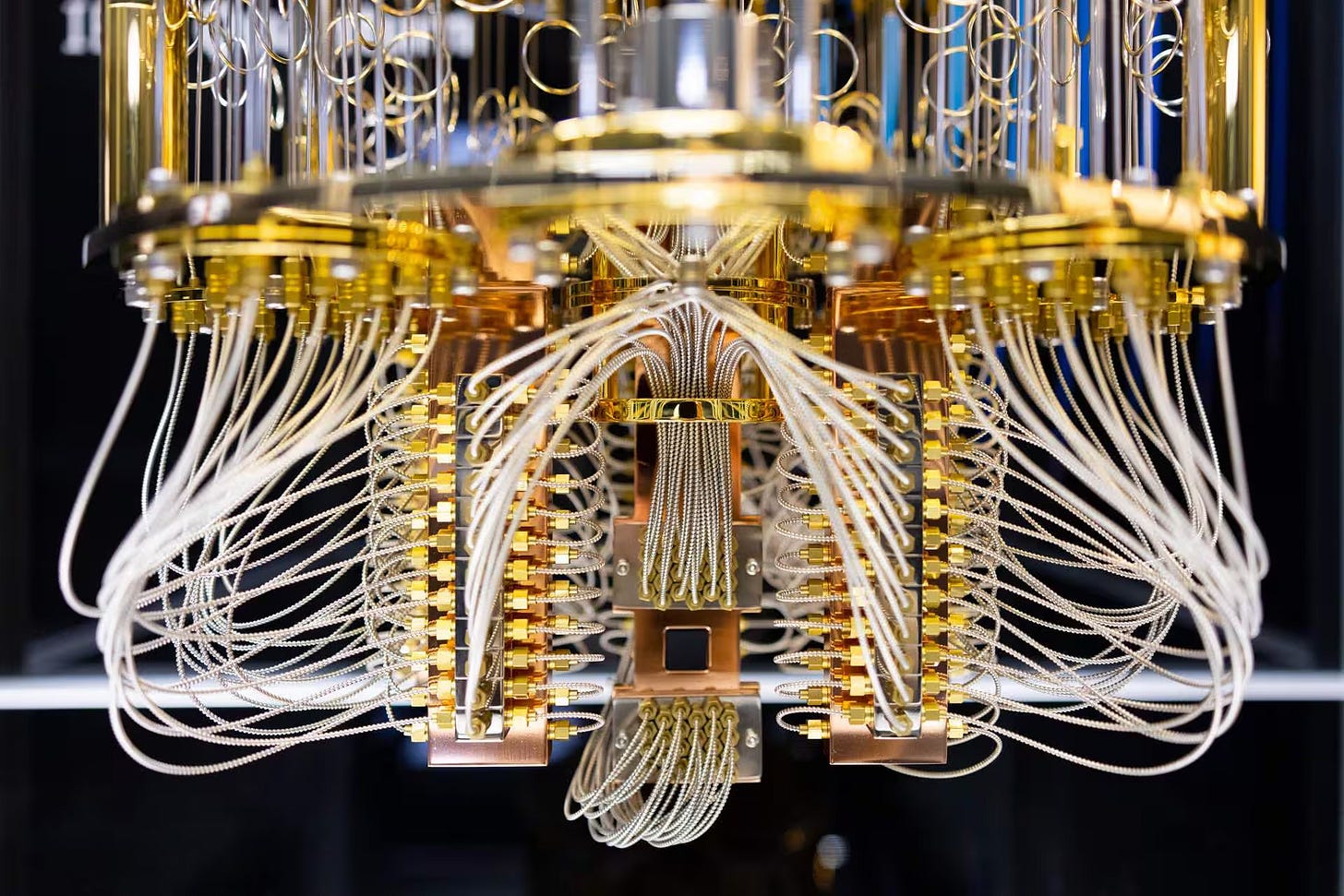

Physical qubits are the stuff QCs are made of: ions, superconducting circuits, photonic modes, neutral atoms. They are manipulated through pulses and lasers and microwaves. They are noisy, short-lived, and error prone. They interfere with each other and oftentimes, if you add more, the interference in the whole system gets worse. Physical qubits don’t stay quantum forever, and eventually decohere.

Logical qubits are the actual thing you want and are built from error-corrected physical qubits. Depending on fidelity – the odds of your instructions being faithfully carried out at each gate – you might need hundreds or thousands of physical qubits per logical qubit. More fidelity means fewer physical qubits required.

To do anything useful with a quantum computer, you need hundreds or thousands of logical qubits, which implies 100s of thousands to millions of physical qubits. The best systems we have today have maybe 1000 physical qubits and around a dozen logical.

Error correction is the real innovation that helps stabilize messy physical qubits to produce clean logical qubits. Every few microseconds, you run a sequence to check if groups of qubits are still satisfying certain conditions. This tells you where errors are occurring on the grid. You guess at what the problem is and apply a correction. If your error rates are low (your fidelity is high), each error correction cycle fixes more errors than it introduces. This is the whole ballgame. If error correction is real, useful quantum computing can exist.
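The claim that correction only helps when error rates are low enough can be seen in a purely classical toy: store one bit as three copies and take a majority vote. This is an analogy only. Real quantum error correction measures syndromes rather than the data itself, and its threshold is far stricter than the 50% of this toy. The function names are illustrative.

```python
import random

def exact_three_copy(p: float) -> float:
    """Chance that a majority of 3 copies flip: 3p^2(1-p) + p^3."""
    return 3 * p**2 * (1 - p) + p**3

def simulated(p: float, copies: int = 3, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the majority-vote failure rate."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(copies))
        if flips > copies // 2:
            failures += 1
    return failures / trials

for p in (0.01, 0.1, 0.6):
    print(f"physical error {p}: encoded error {exact_three_copy(p):.6f} "
          f"(simulated {simulated(p):.6f})")
```

At a 1% physical error rate the encoded bit fails about 0.03% of the time, a large improvement. At 60% the encoding makes things worse. That crossover is the toy version of "each cycle fixes more errors than it introduces".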

If you can build a useful quantum computer, you might achieve quantum supremacy[3]: a quantum computer’s ability to perform a task that a classical computer cannot.

Bitcoiners should care about quantum risks

Quantum computing is a very strange technology.

We know what it can do – factor large numbers, for one – and we can even describe exactly how it would go about doing that. We just haven’t built the right kind of hardware to run the algorithm yet. We’re not even completely sure it’s possible to build the hardware.

In some ways, quantum is the antithesis of AI. Quantum capabilities are already known and indeed provable, we just don’t know if we can scale to actually effectuate them. AI capabilities are unknown and potentially unbounded, and we don’t know where we’re going at all. But we do know that if we keep delivering certain inputs (data, training time, and compute), we can get predictably better outputs. We bake larger and larger models and then empirically discover what they can do. Quantum is a statue waiting to be freed from a block of marble; AI is a new lifeform being cultivated inside a petri dish.

There’s two things we know for sure quantum computing can do which classical computers cannot: break all encryption that relies on it being hard to factor numbers, and simulate quantum systems. Beyond that, we only have vague notions of where it might be commercially useful. Logistics optimization or portfolio simulation, maybe. Materials science exploration. Chemistry problems relating to drug discovery or new battery designs.

Quantum is also strange in that while it has enormous scientific promise – after all, we live in a quantum world but we’ve never been able to compute quantumly – its primary near-term application is immensely destructive. A huge chunk of our communications and internet infrastructure relies on RSA, elliptic curve, or Diffie-Hellman-based crypto systems, and quantum dispatches all of them. In simpler terms, our entire networked society is built on the assumption that certain problems – factoring a product of two large primes and inverting elliptic-curve multiplication – are hard. (If you read Part I, you should have a good sense of why ECDLP is hard.)

The arrival of Q-day[4] would be very positive for our ability to fundamentally understand physics and our universe, but it would also be momentarily calamitous, if we are unprepared. The emergence of a cryptographically relevant quantum computer (“CRQC”) – a quantum computer with a few thousand logical qubits – would force us to abandon most of our fundamental cryptographic infrastructure.

Some systems, like AES and hashing (you should be familiar with SHA-256) are partially degraded by quantum, but not catastrophically so. But all elliptic curve and integer-factoring based cryptosystems would be rendered obsolete.

The fallout would be immense. All encrypted communications will be presumed exposed. (Including pre-Q-day communications that adversaries were smart enough to harvest and wait to decrypt.) The entire web – TLS, HTTPS, server certificates – will have to be torn out and rebuilt. Every government, corporate network, bank, and hospital will have to retrofit their VPN and SSH infrastructure. Cloud infrastructure will have to be rebuilt. Firmware signing and HSMs will be borked. All encrypted messaging systems will have to upgrade. Oh, and all blockchains. Bitcoin, Ethereum, Solana, the lot.

We’ve known for a long time in a detached, academic way that quantum computing would cause unique challenges for blockchains and digital assets. I even put ‘quantum’ on my Bitcoin FUD dice in 2018. But quantum never felt real. It felt like one of those technologies like fusion that was “always 30 years away” with progress stalled due to fundamental limitations. Even today, there are many very smart people who I respect and admire that will tell you with absolute certainty that quantum is a fictional technology, and these kinds of arguments are akin to debating how many angels could dance on the head of a pin.

But as Bitcoiners it’s our duty to be exceptionally paranoid. Bitcoin has this baked into its development mindset. Even unusual and low-probability risks should be taken seriously. And the truth is that the reality on the ground has shifted. There have been fundamental breakthroughs in quantum this year. We are seeing new highs in physical and logical qubit counts. Promising new modalities have emerged. And more capital has been deployed to support quantum startups than ever before.

Quantum threatens the most basic assumption inherent to Bitcoin – that knowledge of a private key is equivalent to ownership of some UTXOs. Quantum entirely breaks this, allowing theoretically anyone to reverse-engineer a private key from a revealed public key. This is unacceptable and existential. The emergence of a CRQC would threaten Bitcoin existentially, not only directly by undermining the property rights of the system, but also by causing political divisions between rival factions who disagree about how to deal with the problem. And the sooner it happens, the worse the outcome will be. So it behooves us to start monitoring quantum progress today, and planning for Q-day as the odds of a CRQC creep higher.

The creation of a CRQC would be bad for Bitcoin

As we discussed in the first post in the series, Bitcoin depends on elliptic curve cryptography, and the assumption that the elliptic curve discrete log problem is hard. Both ECDSA and Schnorr (introduced with Taproot in 2021) are built on ECC and rely on the secp256k1 curve. The one thing we know for sure a quantum computer can do – at sufficient scale, say 2000 logical qubits – is to reverse engineer a private key from a known public key. Recall from our first episode that when we create a public key we start with a private key k and multiply it by a set point G on an elliptic curve, giving us a public key P. Inverting the process is meant to be virtually impossible. Classically, to reverse-engineer k from P given a known G, the naive approach is to try values of k until you stumble on the right P, and even the best known classical algorithms need on the order of 2¹²⁸ operations for a 256-bit curve. Impossible.
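The forward direction, P = k·G, can be sketched in a few lines of plain Python. The constants are the published secp256k1 parameters. The function names (`add`, `multiply`, `compressed`) are illustrative, and the code is not constant-time, so treat it as a teaching sketch and not as wallet code. It needs Python 3.8+ for the modular inverse via `pow`.

```python
# secp256k1 domain parameters (public constants).
P = 2**256 - 2**32 - 977  # field prime
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141  # group order
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)

def add(a, b):
    """Add two curve points; None stands for the point at infinity."""
    if a is None:
        return b
    if b is None:
        return a
    (x1, y1), (x2, y2) = a, b
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # a point plus its mirror image
    if a == b:
        slope = 3 * x1 * x1 * pow(2 * y1 % P, -1, P) % P  # tangent line
    else:
        slope = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P  # chord
    x3 = (slope * slope - x1 - x2) % P
    y3 = (slope * (x1 - x3) - y1) % P
    return (x3, y3)

def multiply(k, point=G):
    """Double-and-add: roughly 256 doublings no matter how large k is."""
    result = None
    while k:
        if k & 1:
            result = add(result, point)
        point = add(point, point)
        k >>= 1
    return result

def compressed(point):
    """33-byte compressed encoding: parity prefix plus x coordinate, as hex."""
    x, y = point
    return ("02" if y % 2 == 0 else "03") + f"{x:064x}"

print(compressed(multiply(1)))  # the generator point G itself
article_key = int("50d858e0985ecc7f60418aaf0cc5ab587f42c2570a884095a9e8ccacd0f6545c", 16)
print(compressed(multiply(article_key)))
```

The last two lines feed in the private key quoted a little further on. If the two hex strings in this article are a genuine pair, the output should match the quoted public key; no result is asserted here. Either way, the asymmetry is visible: going from k to P takes a few hundred point operations, while nothing comparably cheap is known classically for going back.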

Shor’s algorithm running on a sufficiently large quantum computer undermines this completely. In somewhat mathematical terms: “Shor extracts the discrete log by converting the elliptic curve structure into a frequency-domain period problem, and the quantum Fourier transform pulls the private key out of the symmetry.” In plainer language, the problem is to try and find out the number of steps away public key P is from set point G. Finding this out classically is presumed impossible. The QC running Shor prepares a superposition of all possible combinations of steps between G and P, evaluates the function on those values in one physical evolution, and the only stable surviving pattern is the periodic structure from which k can be extracted. It does not “compute all values of k” in parallel. It holds a weighted blend of all possible inputs simultaneously, evolves one wavefunction containing those possibilities, and highlights a specific pattern of interference.
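The "periodic structure" can be made concrete in a toy group small enough to enumerate. The sketch swaps the elliptic curve for powers of 2 modulo the prime 101, with a made-up secret k = 37. It only demonstrates that the hidden pattern exists, not how a quantum Fourier transform extracts it. The two-argument function `f` follows the usual textbook presentation of Shor's discrete-log variant, which is an assumption about presentation and not something taken from this article.

```python
# Toy group: powers of g modulo a small prime (NOT an elliptic curve).
p, g = 101, 2
order = 100        # 2 has multiplicative order 100 modulo 101
k = 37             # the "private key" (made up for illustration)
h = pow(g, k, p)   # the "public key": k steps away from g

def f(a, b):
    """g^a * h^b mod p: the kind of function evaluated over all (a, b) at once."""
    return pow(g, a % order, p) * pow(h, b % order, p) % p

# Hidden structure: shifting (a, b) by (k, -1) never changes the output.
assert all(f(a + k, b - 1) == f(a, b)
           for a in range(order) for b in range(order))

# A classical search has to walk the group one step at a time to find k.
steps = next(a for a in range(order) if f(a, 0) == f(0, 1))
print(steps)  # 37
```

In a group of size 100 the walk is trivial. In a group of size roughly 2²⁵⁶ it is hopeless classically, while the shift by (k, −1) is still there for interference to pick out.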

With a quantum computer of around 1500-2000 logical qubits and maybe 10m physical qubits and a long enough runtime (hours, days), it’s suddenly plausible to turn your public key

02 4033389cf6632a172546748fda79e7fbabce944b552db5dba81b16c01fec377b

back into your private key

50d858e0985ecc7f60418aaf0cc5ab587f42c2570a884095a9e8ccacd0f6545c

To make matters worse, Shor’s algorithm runs in time polynomial in the key size, rather than exponential. For elliptic-curve discrete log on a 256-bit curve, “vanilla” resource estimates are on the order of 10¹¹ Toffoli gates for P-256. More favorable architectural assumptions bring this down to 50 million Toffoli gates per key.[5] So Shor inverts the scalar multiplication which was meant to be extremely difficult. And, in fairness, it was. We had to invent an entire new branch of physics and then carefully arrange atoms in entangled quantum states to create little miniature computers in order to break it.

What does this mean for Bitcoin?[6]

We will break it down by coins at rest and coins in transit, since considerations are different. Starting with inert coins, the good news is that Satoshi erected a first line of defense against a quantum attacker by twice hashing public keys to create addresses. Remember how in our first article, we converted the above public key into the address 17oFBXab… by hashing it twice? Quantum computers can break ECC256 but they can’t realistically reverse-engineer SHA-256[7] in a useful way. So as long as your coins are in an address format that is hashed, they shouldn’t be exploitable by a quantum attacker (with caveats that we will explore shortly).

The coins to worry about are those where the public keys are visible in the locking script, namely pay to public key (p2pk) and pay to multisig (p2ms), an obsolete multisig format. You can ignore p2ms for now because it’s not the common multisig address format any more. P2pk is the problematic one as it stores 1.7m BTC or 8.3% of all outstanding coins. These coins almost all date back to the very earliest days of bitcoin. The coins that are presumed to be Satoshi’s are sitting in about 20k different p2pk addresses each with 50 BTC. Here’s one example: Block 13 from 2009. In that transaction, Satoshi (we suspect) received 50 BTC directly from the block reward, as an early miner. The whole block is one transaction straight to an uncompressed public key

04c5a68f5fa2192b215016c5dfb384399a39474165eea22603cd39780e653baad9106e36947a1ba3ad5d3789c5cead18a38a538a7d834a8a2b9f0ea946fb4e6f68

visible on the blockchain.

And there’s tens of thousands of these. Satoshi never consolidated their coins, never moved them over to hashed addresses. Satoshi certainly could have done so, knowing the risks. We know that Satoshi was aware of the possibility of a quantum break to ECC. Satoshi suggested that we could move to a new signature algorithm if necessary, but seemed fairly unconcerned about the topic in their handful of posts about it. For whatever reason, Satoshi left these coins in these exposed wallets, and there they sit.

We need to dwell on this for a moment. The fact that 1.7m BTC are sitting in exposed, presumably abandoned p2pk addresses from the early days of Bitcoin is a gigantic problem. No matter what happens with Bitcoin – whether through some miracle we upgrade to a post-quantum signature scheme in an orderly manner – these coins will still be at major risk in the next decade. And unless you think Satoshi – and anyone else from that era that may have lost their keys on an old hard drive – is coming back to rescue their coins in the nick of time, those coins are just a gigantic, hundred-plus-billion-dollar bug bounty to be consigned to whoever develops a CRQC first.

Bitcoin’s protocol can upgrade, yes, but there is no way to go back in time and force those early users to move their coins into more up-to-date address formats. The only thing the Bitcoin community could plausibly do is simply soft fork and decree that those old p2pk coins are unspendable – but this would be a kind of a collective confiscation by mob rule, which is also contrary to a core Bitcoin value. So no matter what, the emergence of a CRQC throws us into a serious dilemma: do we accept that Satoshi’s coins will likely be stolen by whoever happens to develop QC first, or do we violate Bitcoin’s property rights and burn or immobilize the coins? And if we decide to forcibly upgrade those coins to new address formats, or we burn the old coins and create a claimable reserve, how then do we determine who their true owner was? Our previous test for whether Satoshi was genuine or not was a signature matching early coins; but quantum makes this form of proof irrelevant too. So we would have no way to determine, post Q-day, whether someone would be an authentic claimant.

These attacks we are talking about are called “long range attacks” because the attacker has basically unlimited time to reverse-engineer the private key from a known public key. In quantum computing, this is a big deal, because there’s an inverse relationship between time needed to run a program and qubit counts.

Coins held in newer Taproot addresses are also potentially exposed. This is a little shocking because Taproot is a newer addition to Bitcoin, so the engineers behind that probably should have foreseen the risk. I’m going to cite the Chaincode report here regarding p2tr because I am not an expert and it’s complicated, so if I’m wrong, you can go yell at them instead:

Pay to Taproot (P2TR), introduced in the 2021 Taproot soft fork, exposes public keys in a different but equally vulnerable manner. P2TR provides two spending mechanisms: the keypath and the script-path. The key-path allows spending using a signature from a “tweaked key” (a public key combined with a commitment hash of possible script conditions), while the script-path requires revealing and satisfying one of these predefined script conditions. Because the tweaked public key is exposed on the blockchain, a CRQC could potentially derive the corresponding private key, enabling unauthorized spending via the key-path without needing to satisfy any script conditions. Unlike P2PK and P2MS, P2TR’s vulnerability could be addressed through a soft fork that disables key-path spending if quantum threats emerge […].

So unfortunately, Taproot addresses as currently contemplated might expose your public key as well. There’s 185k BTC in p2tr addresses today.

And this is just one class of problem.

The next major issue is that, as we discussed in the first post, whenever you spend Bitcoin, you reveal the public key of the spending wallet on the blockchain. This means that if you have 1000 BTC in a big coldwallet and you send 1 BTC out, the public key for that remaining 999 BTC is now visible.

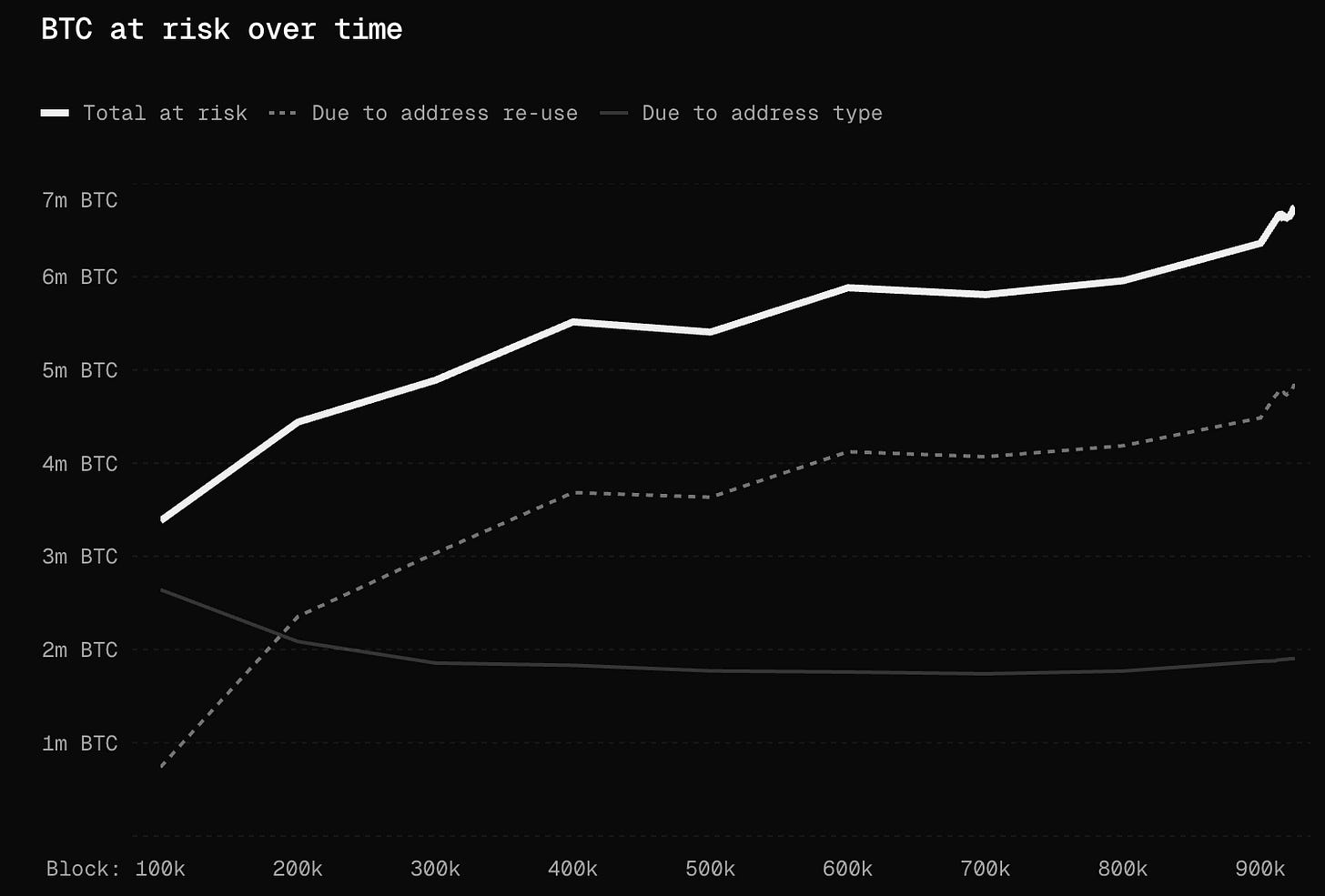

So even if your coins are in a hashed address type (this is probably where your coins are being held right now), the moment you actually spend from that address, your public key is exposed. Continuing to hold coins at an address you have already spent from is called address reuse; it’s discouraged in Bitcoin, but it happens rampantly. And address reuse is actually responsible for more quantum exposure than ancient coins in legacy address types.

Project Eleven has a nice dashboard tracking this. As of today, there are about 4.8m BTC in addresses with exposed public keys due to reuse, and 1.9m BTC in legacy or Taproot addresses that are also exposed.

That’s almost precisely one third of all circulating BTC (or $579b worth) which is vulnerable to a quantum computer today. If you had a CRQC today and you wanted to cause maximum chaos, you wouldn’t even go after one of the thousands of 50 BTC address lots from the Satoshi days. You could instead go for the biggest prize: the Binance coldwallet 34xp4vRoCGJym3xR7yCVPFHoCNxv4Twseo with an exposed public key and 248k BTC in it.
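The arithmetic behind those figures is easy to check. The sketch below uses only the numbers quoted above and backs out the BTC price and circulating supply they imply. No outside data is used, and the variable names are illustrative.

```python
reused_btc = 4_800_000          # exposed through address reuse (from the text)
legacy_taproot_btc = 1_900_000  # exposed legacy and Taproot outputs (from the text)
exposed_usd = 579e9             # dollar value quoted in the text

exposed_btc = reused_btc + legacy_taproot_btc
implied_price = exposed_usd / exposed_btc  # USD per BTC implied by the two figures
implied_supply = exposed_btc * 3           # supply if the share were exactly one third

print(f"exposed: {exposed_btc / 1e6:.1f}m BTC")
print(f"implied price: ${implied_price:,.0f} per BTC")
print(f"implied circulating supply: {implied_supply / 1e6:.1f}m BTC")
```

The two exposure figures sum to 6.7m BTC, which is consistent with "one third" only if circulating supply is about 20.1m BTC, so the phrase should be read as an approximation.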

If that doesn’t worry you, there’s a final class of problem which applies to literally all addresses, no matter how good your hygiene.

This final class of exploit is called a short range attack, and it applies in the brief window after you have broadcast a transaction to the network and before it has been confirmed in a block. No matter what address you’re spending to or from, once you broadcast a transaction, your public key is exposed. In the 10 minutes or so it takes for the transaction to be included in a block, a sufficiently powerful quantum attacker could sniff out your transaction from the mempool, reverse-engineer your private key, and broadcast a conflicting transaction that pays themselves with a higher fee, redirecting your coins mid-flight. This requires much more powerful quantum computing hardware, since the first attacks are expected to take hours or days rather than minutes, but it’s worth mentioning as a further theoretical vulnerability.

If it sounds grim, it is. Bitcoiners have scarcely contemplated quantum risks to date, especially because many developers believe quantum is still sci-fi technology or decades away. However, if it’s less than a decade away, we have to start planning now. Virtually everything I’ve discussed in this section can be addressed (except perhaps the Satoshi coins element), but we have to start the process of deliberation now, so that we can move on to selecting a specific class of PQ-signatures, proposing an upgrade to the protocol, and giving users time to opt in.

Bitcoin upgrades are notoriously tricky and slow: SegWit was first formally proposed in March 2015 and only adopted in August 2017. Taproot was conceptually laid out in early 2018 and only activated in late 2021. Any post-quantum soft fork would be a far more fundamental change and would be wildly more complex. It would rip out and replace the essence of the system which is ECC. If we want this implemented by 2030, we have to start thinking about it now. And based on all the data I’m seeing, I think this is warranted.

Why it’s reasonable to be concerned about quantum

In this section I’ll present ten arguments supporting my view that CRQCs will emerge in the meaningful near future, as in, by 2035. These are arranged in decreasing order of importance – as in, the most persuasive arguments (in my view) come first. I’m not the only one who has noticed. Public markets are in a quantum frenzy. Venture investors have dumped more cash into quantum startups than in any other year in history. The CEOs of big tech firms have revised and shortened their quantum timelines. Governments are preparing for Q-day. But instead of appealing to authority or to efficient markets, I’ll try to summarize all the datapoints which caused me to revise my view from “completely off the radar” to “something Bitcoiners (and other coin-ers) should prepare for, starting today.”

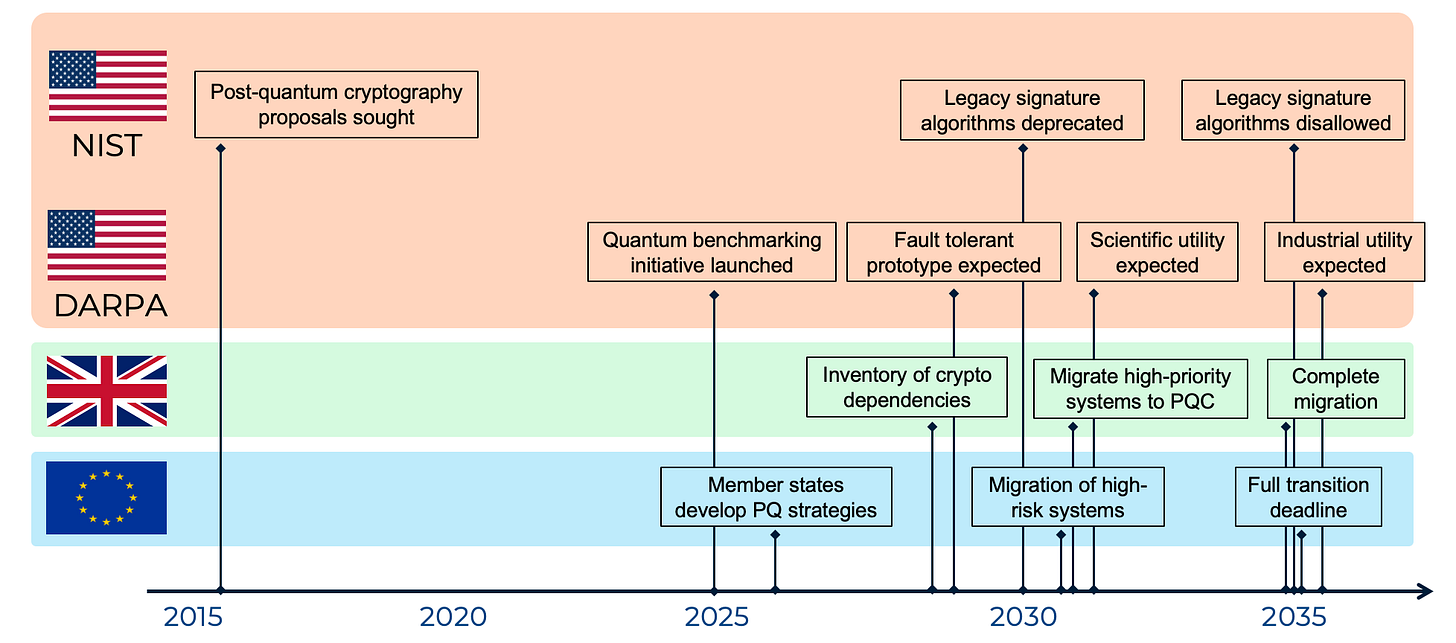

1. Governments are planning for a post-quantum world

Major standard-setting bodies like the National Institute of Standards and Technology (NIST) have been contemplating the move to post-quantum (PQ) signatures for a while now. Because cryptography operates on decades-long timelines (it is the ultimate foundation of a huge amount of infrastructure, and ripping out and replacing infrastructure is a necessarily slow process), NIST is planning decades ahead. They take no view on when specifically CRQCs might emerge, but point out that since their timelines are long, they are forced to care:

While in the past it was less clear that large quantum computers are a physical possibility, many scientists now believe it to be merely a significant engineering challenge. Some engineers even predict that within the next twenty or so years sufficiently large quantum computers will be built to break essentially all public key schemes currently in use. Historically, it has taken almost two decades to deploy our modern public key cryptography infrastructure. Therefore, regardless of whether we can estimate the exact time of the arrival of the quantum computing era, we must begin now to prepare our information security systems to be able to resist quantum computing.

In 2016, NIST kicked off a process to solicit proposals for quantum-resistant public key algorithms, understanding that both ECC and RSA algorithms were doomed if CRQCs emerged. Last year, they published their first public draft detailing their new PQ public key algorithms and laying out a timeline. The timeline is eye opening. They suggest deprecating legacy signature algorithms like ECC256 in 2030 and fully disallowing them by 2035.

NIST has some proposed post-quantum signatures which could be swapped in for ECDSA like ML-DSA but this comes with huge tradeoffs, like a 38x increase in data overhead. Without a further increase in blockspace, swapping in ML-DSA would hammer Bitcoin’s scalability, reducing it by an order of magnitude to around 1tx/sec.
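For a sense of where a figure like 38x can come from, the sketch below compares published object sizes: a 64-byte Schnorr signature with a 32-byte x-only public key (BIP 340), against the smallest ML-DSA parameter set, ML-DSA-44, with a 2,420-byte signature and 1,312-byte public key (FIPS 204). Whether this is the exact comparison behind the number quoted above is an assumption.

```python
# Published sizes in bytes.
SCHNORR_SIG, SCHNORR_PUBKEY = 64, 32      # BIP 340
MLDSA44_SIG, MLDSA44_PUBKEY = 2420, 1312  # FIPS 204, ML-DSA-44

sig_ratio = MLDSA44_SIG / SCHNORR_SIG
sig_plus_key_ratio = (MLDSA44_SIG + MLDSA44_PUBKEY) / (SCHNORR_SIG + SCHNORR_PUBKEY)

print(f"signature only:         {sig_ratio:.1f}x larger")
print(f"signature + public key: {sig_plus_key_ratio:.1f}x larger")
```

Both ratios land close to 38, so the headline overhead is roughly what you would expect from the raw sizes alone, before any batching or witness-discount changes are considered.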

Both the Biden and Trump administrations have released executive orders covering quantum preparedness (although the more recent Trump EO from June 2025 was mostly paring back language from the prior EO 14144).

A couple quantum experts that I interviewed for this piece told me that DARPA’s Quantum Benchmarking Initiative was actually the most telling US government program, as opposed to the NIST guidelines. DARPA’s QBI was launched in 2024 to determine if a “utility-scale” QC (in practice, this would mean a CRQC) could be built by 2033. They expect to have the answer by 2028-29. The implicit (and sometimes outwardly stated) goal of the “US2QC” program is to, in their words “reduce the danger of strategic surprise from underexplored quantum computing systems”. Put simply, useful QC undermines almost all communications infrastructure and creates a massive first-mover advantage. So the US Government wants to know precisely whether this is feasible and if so, when.

So far, they have selected 18 companies, some of whom will qualify for meaningful funding under Stage 2 as they attempt to build a utility-scale QC. In a talk given by DARPA quantum lead Dr. Joe Altepeter this summer, the agency outright said that they had accelerated their quantum projections, expecting a fault tolerant prototype in 2027, scientific utility between 2030-32, and industrial utility as early as 2034.

And on November 4th, the Department of Energy joined the party, announcing $625m in funding to advance quantum research. And most recently, the U.S.–China Economic and Security Review Commission, a congressional watchdog that monitors China, released their annual report in which they mention quantum 84 times. The Commission does not mince words, expressing the belief that China has “mobilized state-scale investment and industrial coordination to dominate quantum systems” and “is actively racing to develop cryptographically relevant quantum computing capabilities and is likely concealing the location and status of its most advanced efforts.” They suggest that Congress provide meaningful funding to quantum initiatives and support to the private sector.

Virtually every major government besides the US is moving at a similar pace. Britain’s National Cyber Security Centre has called for an inventory of crypto dependencies by 2028, a migration of high-priority systems by 2031, and a full transition by 2035. The EU’s summer 2025 guidance calls for a post-quantum strategy at the nation state level by 2026, the migration of high-risk systems by 2030, and a full transition by 2035. China, perhaps wary of adopting a PQ scheme that has been backdoored by the NSA, is undertaking their own PQ standardization process, and will likely settle on different PQ signatures. That said, they are operating on a similar timeline: 3 years to settle on standards, 5 years for large-scale migration, and 10 years for full consolidation.

While it is the job of cryptographic standard setting bodies to look into the distant future and be paranoid about breaks in major cryptographic infrastructure, the fact that several different major nation states have independently settled on the “deprecate by 2030, disallow by 2035” timeline is quite telling to me. This doesn’t seem like a precaution taken for a technology which is still decades away.

2. Qubit counts are scaling rapidly

As we mentioned, the sheer number of physical qubits is not the only variable that matters in terms of creating a CRQC. Whether a QC can do useful work is a function of multiple interlocking variables, some of which include:

The number of physical qubits in an array

Gate fidelity, or the likelihood that a gate operation produces the correct result

Coherence time, or how long a qubit can maintain its quantum state

Connectivity, or how many qubits can interact with each other

Gate speed, or the latency per operation

Error correction throughput, or the amount of time it takes to read and correct errors

Physical qubits themselves are not the goal, which is why most quantum companies express their roadmaps in terms of logical qubits – groups of physical qubits which are error-corrected and stable enough to perform useful computation. You could have a QC with a very large number of physical qubits, but if they have poor fidelity your computer won’t be able to do useful work. If your quantum system has a low coherence time, you won’t be able to run a complex algorithm before your qubits decohere.

Moreover, different quantum systems are not strictly comparable on a physical qubit basis. Major approaches to QC have different fidelities and coherence times, so just looking at physical qubits doesn’t give you much information. In a thousand-physical qubit system, variance in the error rate could mean that you get between zero and dozens of useful logical qubits.
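To make the "zero to dozens" range concrete, here is a minimal sketch. It assumes a rotated surface code, in which one logical qubit at code distance d occupies 2d² − 1 physical qubits, and it ignores the extra qubits real machines need for routing and magic-state factories. The distances in the loop are illustrative choices of mine, not figures from any vendor, and the function names are hypothetical.

```python
def physical_per_logical(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch:
    d^2 data qubits plus (d^2 - 1) measurement qubits."""
    return 2 * distance**2 - 1


def logical_qubits(physical_budget: int, distance: int) -> int:
    """Whole logical qubits that fit in the budget (no routing/factory overhead)."""
    return physical_budget // physical_per_logical(distance)


if __name__ == "__main__":
    budget = 1000  # the thousand-physical-qubit system from the text
    for d in (3, 5, 7, 15, 23):  # assumed distances; noisier hardware needs larger d
        print(f"d={d}: {physical_per_logical(d)} physical per logical, "
              f"{logical_qubits(budget, d)} logical qubits")
```

The noisier the hardware, the larger the code distance it needs, so the same thousand physical qubits can yield dozens of logical qubits at small distances and none at all at large ones.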

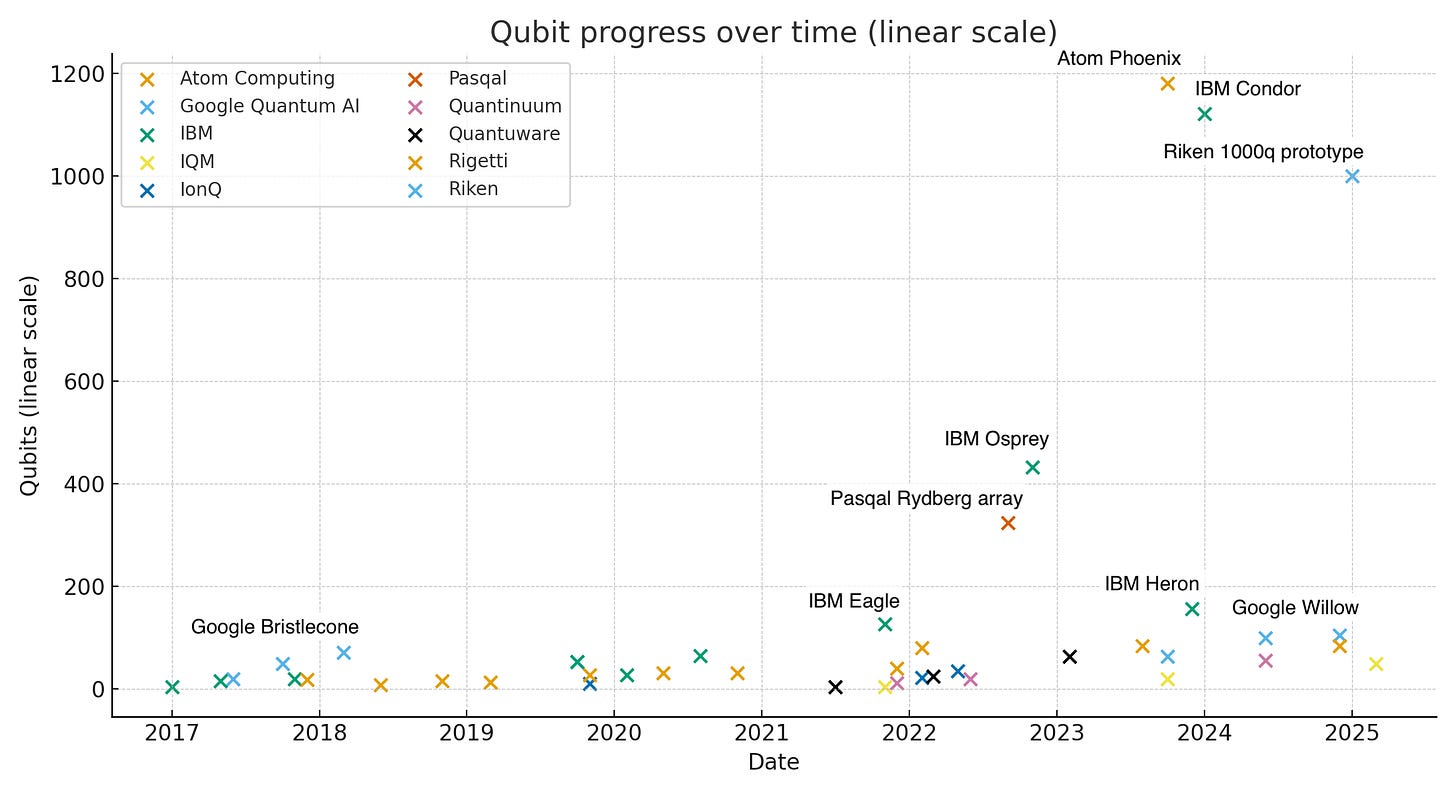

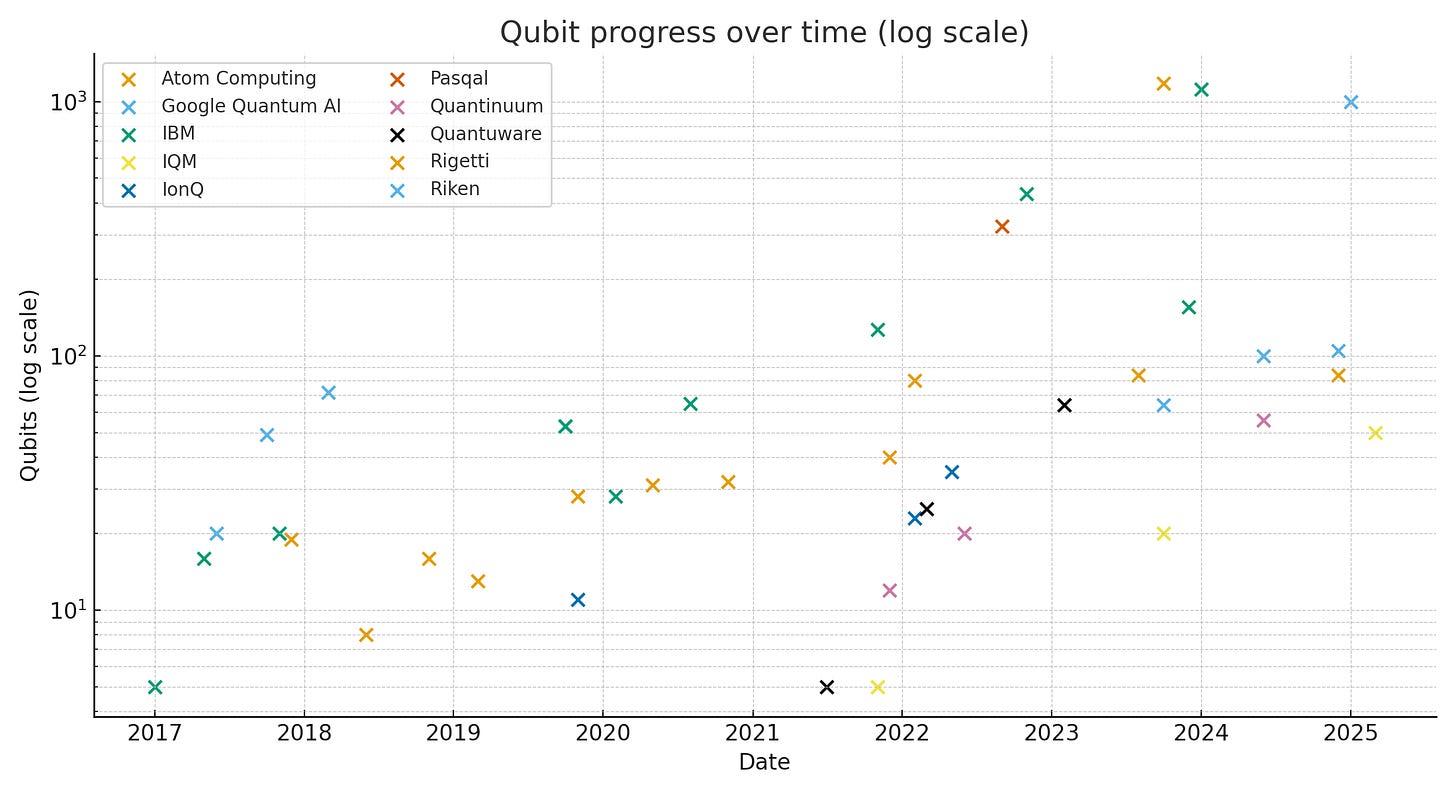

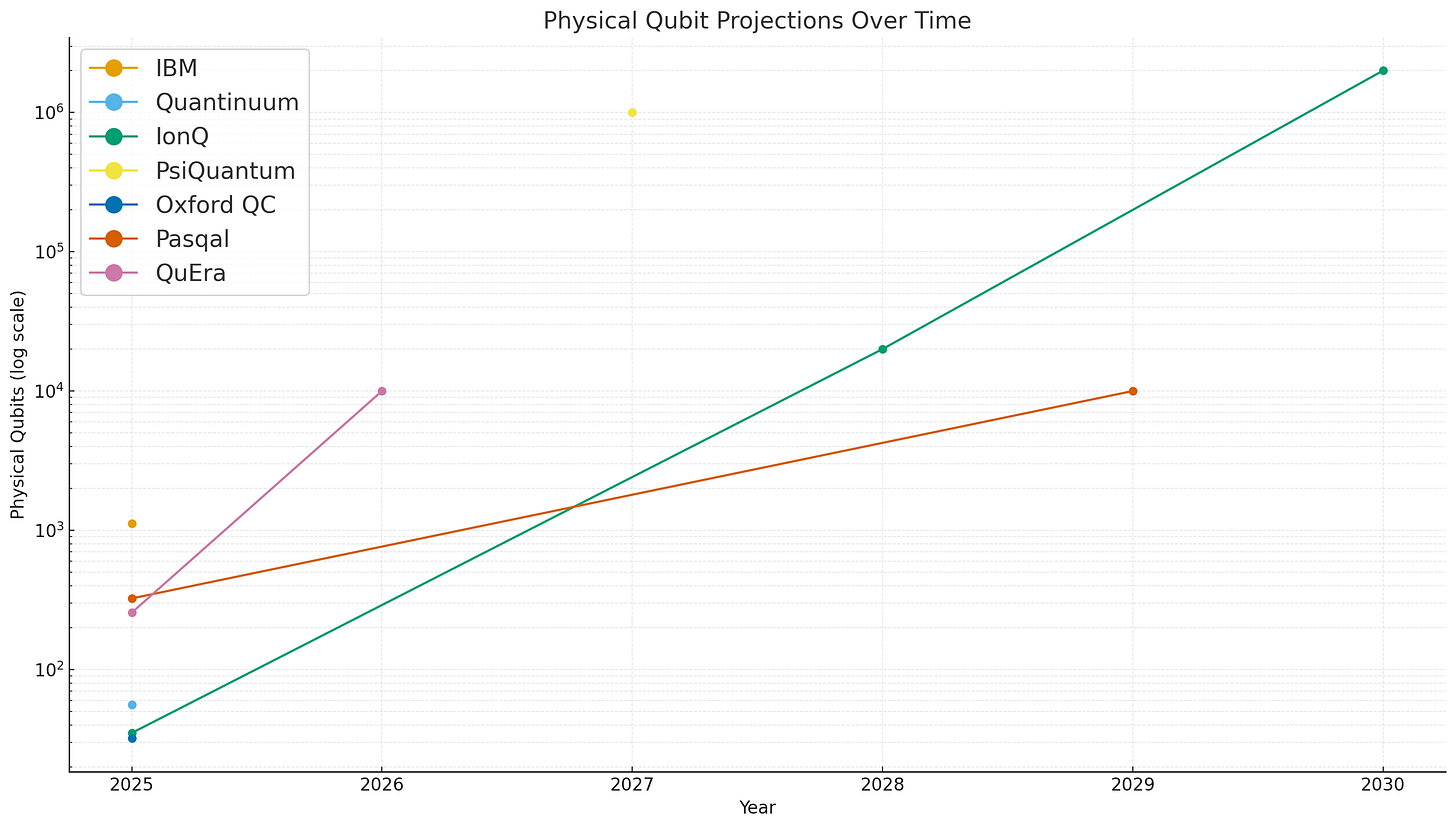

All of that said, looking at physical qubit scaling over time within the same approach can tell you about the development of the field. And we certainly have seen growth on that basis.

Today, neutral atom platforms have been able to build arrays of 1000 physical qubits. Trapped ion approaches have far fewer qubits but demonstrate much better fidelity.

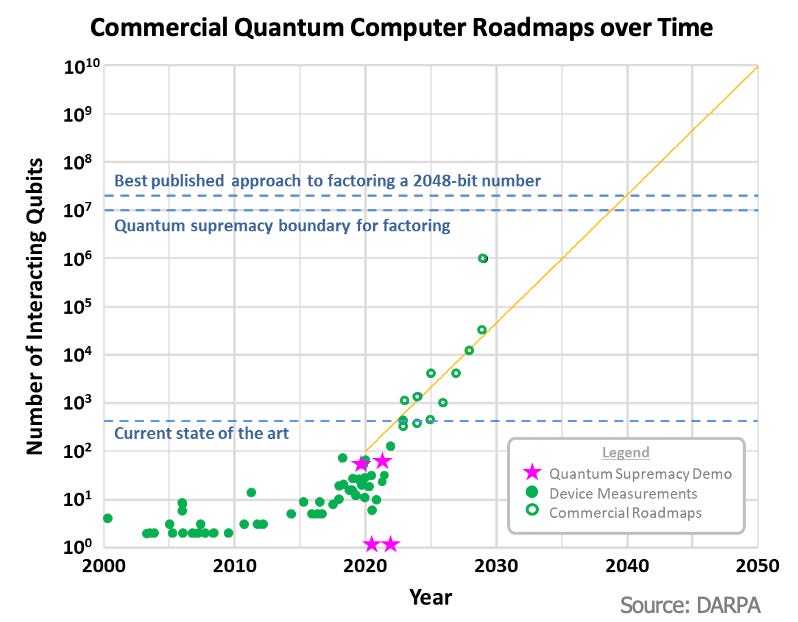

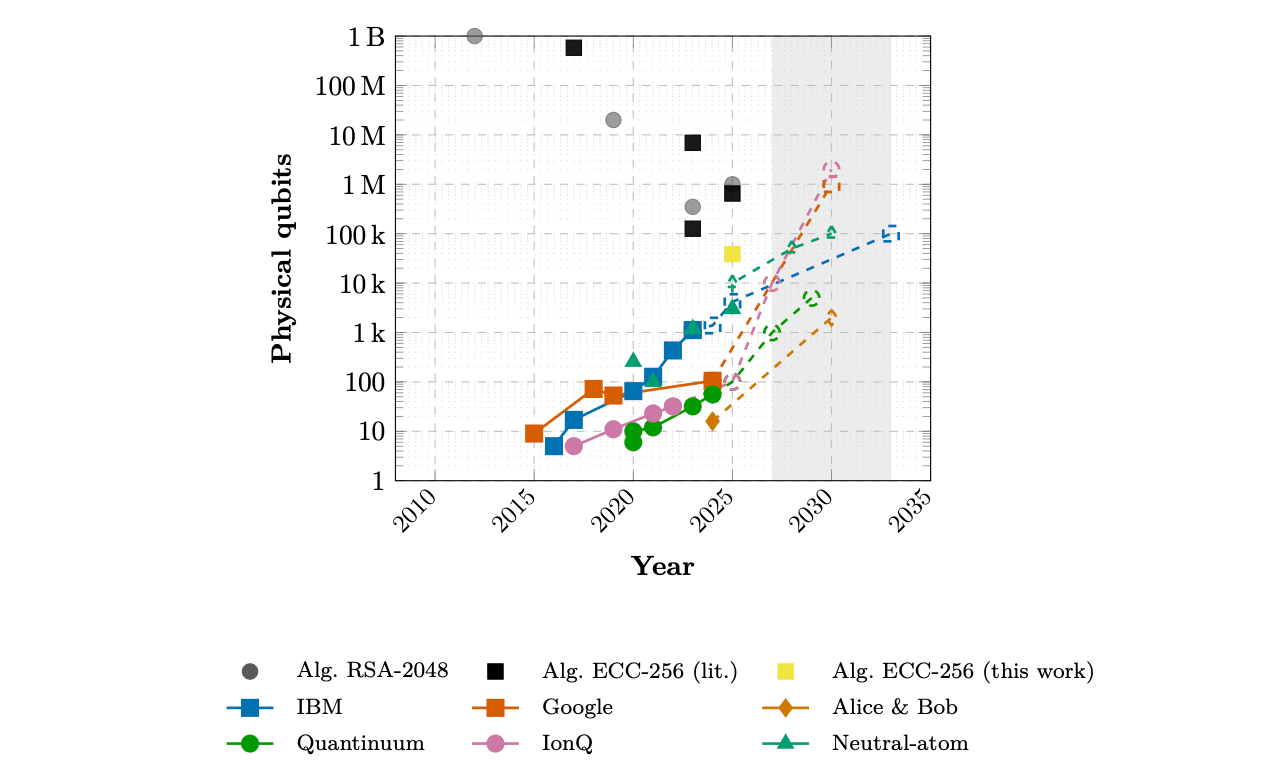

As you can see on the log chart, physical qubits are scaling through the orders of magnitude. Based on current fidelity rates, you’d need perhaps a million physical qubits8 to obtain enough logical qubits for a CRQC, which is three orders of magnitude away.

As far as logical qubits are concerned, the best systems have only achieved a few dozen logical qubits so far (and even those are disputed), so no trendline is observable yet.

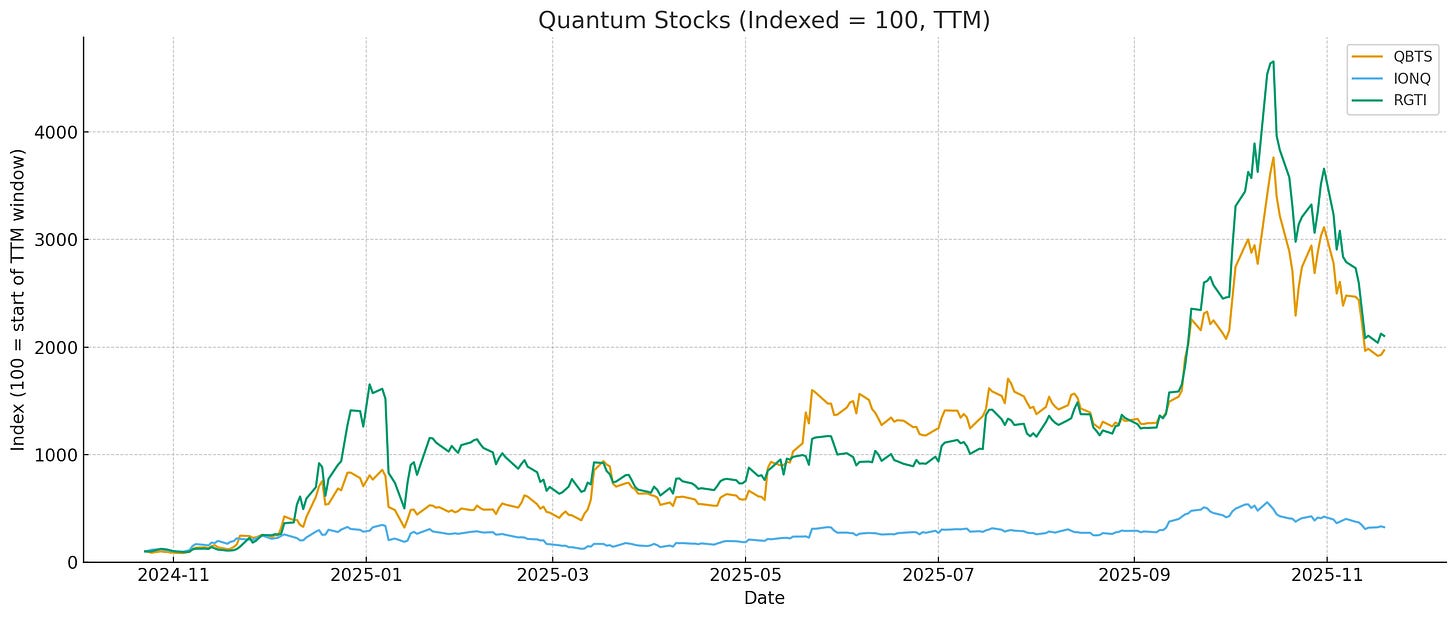

3. Investment in quantum firms is inflecting

It’s been hard to miss the rally in public quantum stocks this year. Standouts Rigetti (RGTI), IonQ (IONQ), and D-Wave Quantum (QBTS) are collectively worth $33.61b, having begun the year at $18.7b. In the last 12 months, despite a sharp recent selloff, Rigetti and IonQ experienced blistering rallies in which their stocks surged by factors of 21x and 19x, respectively.

This rapid appreciation has created a cash spigot for these firms. IonQ has raised over $1.3b this year based on the rally in the stock, as well as using their equity for stock-based acquisitions and for hiring. D-Wave Quantum, a struggling 2022-era SPAC, was on its last legs in 2024 before quantum hype hit. It was able to raise via a $400m ATM facility filed in 2024. Rigetti was a 2021 SPAC that suffered a 96% drawdown after listing but staged an impressive recovery in 2025, surging 50x from its 2024 lows. In mid-2025, they were able to take advantage of their appreciation to raise $350m in an ATM offering. And Infleqtion went public through a $1.8b SPAC that same month.

Though many believe the appreciation of these companies (which have de minimis recurring revenue) is a retail mania, they have been able to turn the rally into very real cash hoards that they can redeploy into R&D.

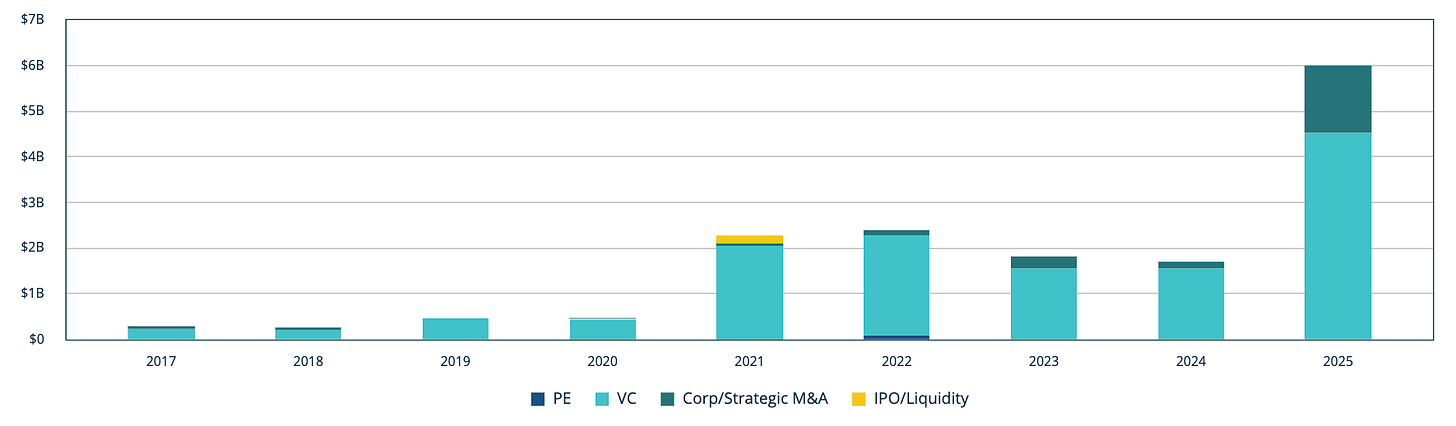

In private markets, investment in quantum startups is also inflecting. 2025 was by far the most active year ever for quantum startup funding.

According to Pitchbook, while deal count has remained relatively static, 2025 was a banner year in VC financing for quantum deals at $6b of capital raised. Notably, PsiQuantum raised a $1b Series E on $7b pre led by BlackRock, Temasek and Baillie Gifford. PsiQuantum is explicitly targeting a million-qubit machine based on photonic qubits, aiming to go for mass scale via existing semiconductor supply chains. I’ll note that PsiQuantum cofounder Terry Rudolph attended and spoke at the Presidio Bitcoin Quantum Bitcoin summit this year. Honeywell’s subsidiary Quantinuum also raised $600m at a $10b pre-money valuation in a round led by Quanta Computer, NVIDIA, and QED.

Meaningful quantum R&D is also taking place at IBM, Microsoft and Google, even though it’s hard to break out how much internal investment is dedicated to the theme. There does appear to be real buy-in at the top though. Google’s Sundar Pichai said this year that “the quantum moment reminds me of where AI was in the 2010s, when we were working on Google Brain,” adding that he thinks practical QC is 5-10 years away. Microsoft’s Nadella has been more effusive, saying of Majorana 1, “We believe this breakthrough will allow us to create a truly meaningful quantum computer not in decades, as some have predicted, but in years.” IBM is perhaps the most bullish of the big 3, forecasting genuine quantum supremacy (for certain applications) in 2026 and the first large-scale, fault-tolerant QC in 2029. Their roadmap sees them building a CRQC in the early 2030s.

Even disregarding the huge R&D budgets Microsoft, IBM, and Google are capable of throwing at quantum, or government research funding, it looks like private investors forked over around $10b of fresh powder in 2025 alone, a massive acceleration compared to prior years. I interpret this in two ways:

Sophisticated investors have decided that within their investment timelines (generally 10 years or less for VC), quantum computers will be economically relevant

Even if quantum was technologically stagnant prior to 2025, the mere addition of attention and capital to the theme has made it fundamentally more likely to work

Of course, there’s still the possibility that quantum is the subject of an investment bubble, and that Rigetti, IonQ, and D-Wave give up all of their gains. On the private side, even sophisticated investors are not immune to thematic mini-bubbles. Those who remember recent history might think of cleantech, mobility, shale, biofuels, 3D printing, NFTs, and so on.

In fact, I think it’s probably true that excitement around quantum has outpaced the fundamental growth of the technology – and certainly the ability for investors to monetize quantum on venture time horizons. But I think both things can be true: that quantum timelines are shortening, and that quantum is the subject of a mini investment mania. As AI bulls will remind you, fundamental technology shifts are more often than not accompanied by investment bubbles, but this doesn’t discredit the underlying technology. (I would argue that they are bicausal – that inflecting fundamentals catalyze investment manias, and that the manias themselves help accelerate the fundamental growth of the technology, even if much of the capital is misallocated). And what we are interested in here is whether quantum computers can become cryptographically relevant, not whether quantum stocks are worth owning.

4. Several major quantum milestones have been achieved this year

In many of my discussions with quantum experts, they kept reinforcing that things seem to have changed this year, especially around gate fidelity.

In June, a University of Oxford team demonstrated single-qubit gate error rates of 10⁻⁷. In October, IonQ demonstrated two-qubit gates with 99.99%+ fidelity. Quantinuum’s Helios result – a 98-qubit trapped ion processor with 99.921% two-qubit gate fidelities – was considered particularly impressive. Gate fidelity is crucially important. Every additional 9 after the decimal cuts the number of physical qubits required for useful computation by an order of magnitude. If you have 10⁻⁴ error per gate, you can run 10k gates before failure, versus 1k for 10⁻³. So 99.99% gate fidelity is generally considered the threshold demarcating toy and actually useful algorithms. And it looks like we are starting to cross that threshold.
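The "gates before failure" rule of thumb can be checked with a few lines of arithmetic. This is a rough sketch that assumes every gate fails independently with the same probability and that there is no error correction at all; the function names are mine, chosen for illustration.

```python
def expected_gates_before_error(error_per_gate: float) -> float:
    """Mean number of gates until the first error, assuming each gate
    fails independently with probability error_per_gate."""
    return 1.0 / error_per_gate


def circuit_success_probability(error_per_gate: float, n_gates: int) -> float:
    """Probability that an uncorrected circuit of n_gates runs with no error."""
    return (1.0 - error_per_gate) ** n_gates


if __name__ == "__main__":
    for fidelity_label, p in (("99.9%", 1e-3), ("99.99%", 1e-4)):
        mean_gates = expected_gates_before_error(p)
        survive = circuit_success_probability(p, 10_000)
        print(f"{fidelity_label} fidelity: ~{mean_gates:,.0f} gates on average, "
              f"P(10k-gate circuit survives) = {survive:.2e}")
```

Under these assumptions, one extra nine moves a 10,000-gate circuit from almost certain failure to succeeding roughly a third of the time, which is why the 99.99% mark gets so much attention.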

Google’s Willow result (technically December 2024) was particularly important. Google showed that as they increased the encoded lattice size of physical qubits in a surface code, the logical error rate decreased in an approximately exponential fashion. One of the major stumbling blocks has been that average error increases with physical qubit count; the Google processor was one of the first times the opposite has been demonstrated. Quantinuum and QuEra also unveiled impressive results along those lines.
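This scaling behavior is often summarized with a suppression factor, usually written Λ: each time the code distance grows by two, the logical error rate is divided by Λ. The sketch below assumes that simple model. The starting error rate and the two Λ values are placeholders I picked for illustration, not Google's reported numbers.

```python
def logical_error_rate(base_rate: float, suppression: float,
                       distance: int, base_distance: int = 3) -> float:
    """Logical error rate at a given code distance under a simple model:
    every +2 in distance divides the error rate by `suppression` (Lambda)."""
    steps = (distance - base_distance) / 2
    return base_rate / suppression**steps


if __name__ == "__main__":
    base = 1e-2  # assumed logical error rate at distance 3 (illustrative)
    for lam in (0.5, 2.0):  # Lambda < 1: above threshold; Lambda > 1: below threshold
        rates = [logical_error_rate(base, lam, d) for d in (3, 5, 7, 9)]
        print(f"Lambda={lam}: " + ", ".join(f"{r:.2e}" for r in rates))
```

With Λ below 1, adding qubits makes the logical qubit worse, which is the old stumbling block. With Λ above 1, every increase in distance pays off exponentially, which is the regime the text describes.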

As one PhD told me: “error correction has been theorized since the 90s, but it has never been proven experimentally. 2025 was the first year in which we knew for sure it was working.”

On the algorithmic side, I’ll get into this further in part 6 of this section, but the Gidney result for RSA2048 was particularly notable. In June, Gidney released a paper estimating that only 1500 logical qubits and fewer than 1m physical qubits are needed to factor RSA2048 in a week, a significant improvement on his 2021 estimate (4000 logical/20m physical/8 hours).

RSA2048 is considered harder than ECC256 to break, so even though a comparable result for ECC256 has not been found (around 2000 logical qubits are still required), Gidney’s paper demonstrates that algorithmic improvements can be made to further reduce required qubit counts.

On the actual capabilities front, startups like PsiQuantum breaking ground and actually starting to build large-scale quantum mainframes also got people’s attention. On the heels of a $1b round of funding, PsiQuantum announced that they would deliver a million-qubit commercial-grade QC by the end of 2027. There are still known and unknown unknowns with their approach, but they have shown intent to actually scale up their approach rather than just staying in the lab. So things have started to move from demonstrations to the actual construction of what are envisioned as utility-scale quantum computers.

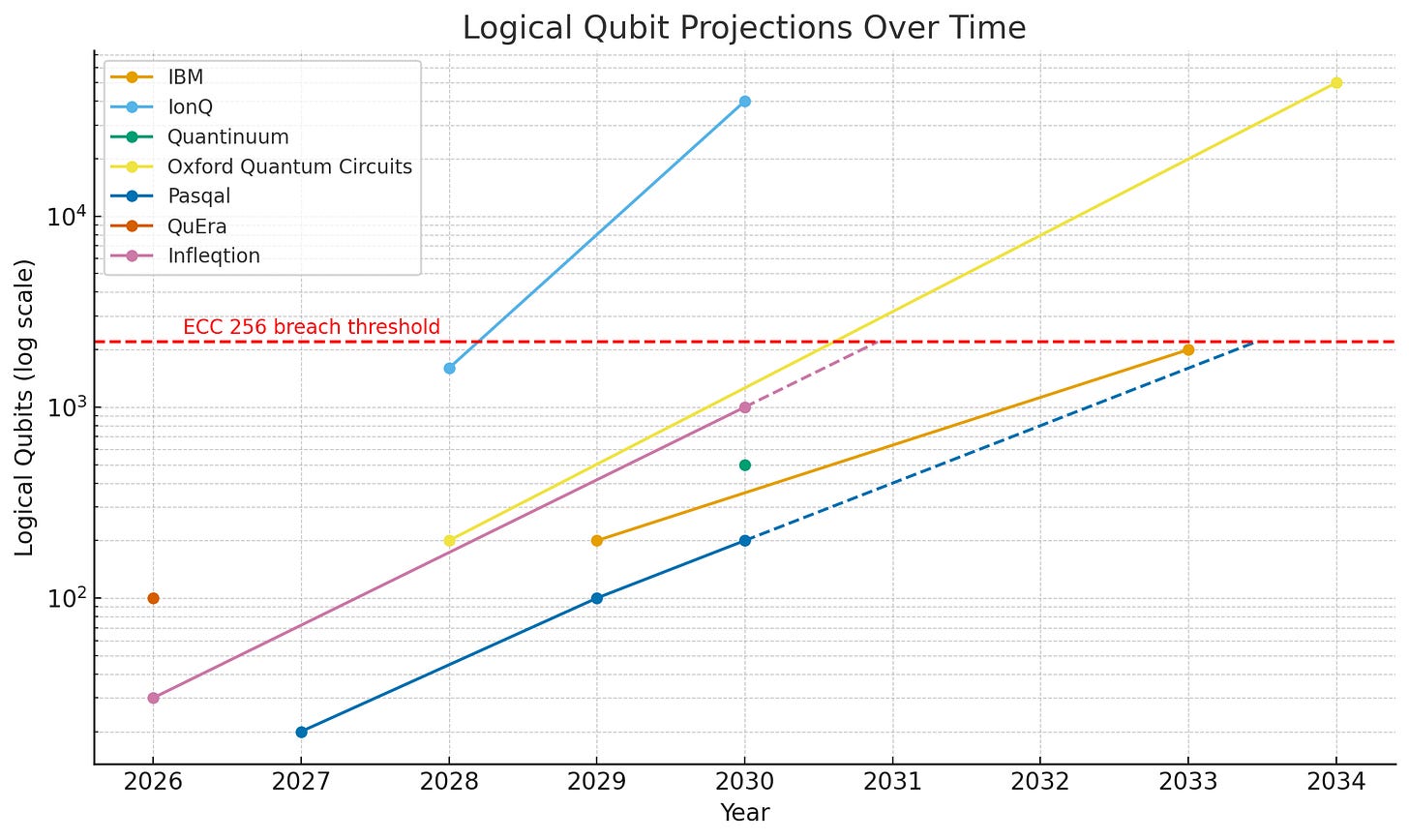

5. Quantum firms project breaking ECC by 2028-2033

While company projections should always be treated with caution, since they have a natural incentive to exaggerate their performance and roadmap, I thought it would be useful to aggregate what the major players in QC have been projecting. The industry has moved away from talking about their capacity in physical qubit terms and is more focused on logical qubits, since the efficacy of physical qubits is so dependent on error correction which is highly variable. Trendlines are depicted with dashed lines.

According to the stated projections of major quantum firms, the industry expects to reach ECC256 breach capability (2000 logical qubits on this chart) between 2028 and 2033. For context on where we are today, Quantinuum unveiled a 48 logical qubit quantum computer on Nov. 5.

These projections are consistent with the responses I got from the physicists that I interviewed for this article. Most of them told me that they expected a CRQC in the early 2030s. Some of them even assigned a low but nonzero probability to the idea that a CRQC might exist already (having been covertly produced by a secretive firm or government).

Some companies have made physical qubit predictions as well, but these are harder to associate with an ECDLP break because there’s no specific number of physical qubits that corresponds to a logical qubit.

All of that said, with today’s fidelities, you’d be looking at a couple million physical qubits (the very top of this chart) to achieve 2000 logical qubits, and only IonQ and PsiQuantum are even projecting numbers that high.

DARPA also released a version of this chart in July 2025 in which they expect around 1m physical qubits to be the threshold for a CRQC, although they did express that their timelines were moving earlier.

6. The number of qubits needed to break crypto systems is dropping

The other problem is that not only are quantum computers expected to get better in the coming decade, but it’s also reasonable to expect that the computational demands of cracking ECC via Shor will keep falling.

The number of logical qubits needed to break ECC256 with Shor is fairly well established: 2330 based on Roetteler et al. (2017). To go from a logical qubit count to an estimate of physical qubits, you need to bake in error rate assumptions (lower error rate = fewer physical qubits needed per logical qubit), and you can tighten or relax time requirements as needed (longer time = fewer physical qubits needed).
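For readers who want to see where 2330 comes from: as I read the 2017 paper, it gives an upper bound of 9n + 2⌈log₂ n⌉ + 10 logical qubits for an n-bit prime-field curve. The snippet below simply evaluates that expression. Treat the formula as my reading of the paper rather than a definitive statement, and note that the function name is hypothetical.

```python
import math


def shor_ecdlp_logical_qubits(n_bits: int) -> int:
    """Logical-qubit upper bound for Shor's algorithm on an n-bit
    elliptic curve, using the bound 9n + 2*ceil(log2(n)) + 10
    (assumed here from Roetteler et al., 2017)."""
    return 9 * n_bits + 2 * math.ceil(math.log2(n_bits)) + 10


if __name__ == "__main__":
    for n in (256, 384, 521):
        print(f"{n}-bit curve: {shor_ecdlp_logical_qubits(n)} logical qubits")
```

For n = 256 this evaluates to 2304 + 16 + 10 = 2330, matching the figure quoted above.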

As time has passed, algorithmic improvements have brought down the number of physical qubits theoretically required. In 2022, Webber et al postulated 3.17 × 10⁸, or 317m, physical qubits to break ECC256 within one hour, or 13m to break it within 24 hours, assuming a physical gate error rate of 0.001. Of course, if gate error rate can be further reduced, the number of physical qubits continues to fall.

In 2023, Daniel Litinski of PsiQuantum proposed a mere 6.912m physical qubits for a 10-minute private key derivation, a significant improvement on earlier proposals.

In their 2025 paper, Dallaire-Demers, Doyle, and Foo standardize the physical qubit requirements for ECC256 and RSA found in the literature, finding four orders of magnitude drops in requirements between 2010 and 2025 for both cryptosystems (the grey dots and black squares on the below chart).

In that same paper, the authors derive their own estimate of the number of physical qubits to break ECC256, placing it in the 100k-1m range. As a reminder, the top labs are only able to produce 1k physical qubit processors today, so we are “only” two to three orders of magnitude away from a break. And remember, this convergence can happen through algorithmic reductions in requirements, better hardware, or both.
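The "two to three orders of magnitude" gap, and how fast it could close, is easy to sanity check. In the sketch below, the 1k and 100k-1m figures come from the text; the yearly growth and shrink factors are purely hypothetical inputs of mine to show the mechanics, not forecasts.

```python
import math


def orders_of_magnitude_gap(current: float, required: float) -> float:
    """Base-10 gap between today's qubit counts and the requirement."""
    return math.log10(required / current)


def years_to_close(gap_orders: float, hardware_growth_per_year: float,
                   requirement_shrink_per_year: float = 1.0) -> float:
    """Years until capability meets requirement if hardware multiplies by
    hardware_growth_per_year and the requirement is divided by
    requirement_shrink_per_year each year (both hypothetical inputs)."""
    combined = math.log10(hardware_growth_per_year * requirement_shrink_per_year)
    if combined <= 0:
        raise ValueError("the gap never closes with these rates")
    return gap_orders / combined


if __name__ == "__main__":
    for required in (1e5, 1e6):
        gap = orders_of_magnitude_gap(1e3, required)
        # Hypothetical scenario: hardware doubles yearly, requirements fall 1.5x yearly.
        print(f"required={required:,.0f}: gap={gap:.1f} orders, "
              f"~{years_to_close(gap, 2.0, 1.5):.1f} years under assumed rates")
```

The point of the second function is that the two trends multiply: falling requirements shorten the timeline just as much as better hardware does.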

Based on demonstrated improving capabilities from top quantum labs and disclosed roadmaps, Dallaire-Demers and his colleagues simply extrapolate the falling physical qubit requirements for a CRQC alongside rising capabilities and expect the first intersections to occur between 2027 and 2033.

And if you want to sanity check this, simply refer to IBM’s own website: they predict that their Blue Jay system expected in 2033 will run circuits of 1 billion gates and 2000 logical qubits, enough to break ECC256.

7. Bitcoin is itself a bug bounty for quantum supremacy

This is an obvious one. Bitcoin is the largest concrete “buyer” of an industrial-scale quantum computer’s product. Satoshi’s coins are worth around $100 billion, with another $500 billion lying around in quantum vulnerable addresses today. We will cover various Q-day scenarios in the third and final installment of this piece, but I want to briefly touch on why the existence of Bitcoin is indeed an accelerant to quantum computing development. Let’s consider it from the perspective of four types of entities who get their hands on a CRQC: neutral evil, chaotic evil, chaotic neutral, and lawful good. I want to use this analytic frame because I often encounter people who tell me that no one would steal any Bitcoin because the consequences would be so dire – Bitcoin would collapse, they would go to jail, etc. But I can think of a few different threat models whereby Bitcoin is nevertheless vulnerable.9

Neutral evil: this is quantum from North Korea’s perspective. They like to steal crypto, not because they have any ideological issue with the US but simply because it pays the bills. In fact, they probably want crypto to keep existing, because stealing it is a good business for them. If a CRQC became sufficiently cheap and easy to create, it’s not impossible to imagine that pariah states like North Korea might get their hands on the technology. Personally, I would surmise that once a method to create a CRQC is established and known, it will be much harder to enforce non-proliferation than it has been with nuclear power, for instance.

So let’s suppose that in the not too distant future the method for creating a CRQC is established and North Korea is able to get their hands on the technology. I would imagine that they would try to monetize it, not with an obvious theft (like stealing Satoshi’s old coins) but simply by using quantum to exploit funds from an exchange coldwallet (with an exposed pubkey), and pretend that it’s a conventional hack. No exchange would realistically be able to tell the difference. (Although – any large or unexpected hacks from ~today forward will have people wondering if they are quantum in nature.) North Korea wants to keep their crypto franchise going so they won’t want to completely destroy the industry by tipping their hand. They also have absolutely no qualms about stealing Bitcoin from westerners. And the US can’t do anything in response because NK is already a pariah and has nuclear weapons. They are “neutral” in this example because they are basically economically motivated and we can model their behavior accordingly.

Chaotic evil: in Q world, this is China. China is the most likely candidate to develop a CRQC outside of the NSA or the American private sector. They have the talent, the capital, and a government that is spectacularly efficient when it comes to industrial policy. Given the immense strategic advantage that is afforded by being the first entity in possession of a CRQC, they have a strong incentive to conceal their progress.

China is chaotic evil when it comes to post-Q-day Bitcoin, because they have a well-established animus towards the system and an incentive to mess with the network. Bitcoin is now associated with the US, thanks to the Trump administration’s embrace of the network and the fact that a majority of coins are held by US firms, individuals, ETFs, and so on. And since China banned Bitcoin in 2021, while some Chinese firms and individuals presumably still hold it, less Chinese wealth is likely tied up in the coin. Not to mention that the Chinese government tends to be rather callous about destroying household wealth when its strategic needs dictate. The CCP also doesn’t care about decentralization or Bitcoin and is instead more keen to push their own CBDC or digital yuan. So China has relatively little to lose from disrupting Bitcoin, and a fair amount to gain – embarrassing the US and directly harming us economically. With the US holding a (likely growing) strategic reserve of Bitcoin, even if China can’t touch the reserve directly, they can still affect its value by shaking confidence in the Bitcoin network. It would be like reaching into Fort Knox and stealing some of the gold bars. If relations further deteriorate between the US and China, why wouldn’t they consider this option in their economic warfare toolkit?

For this reason, China gaining first access to a CRQC is what scares me most. They have the clear motive, potentially the means, and the opportunity to weaponize a CRQC against the Bitcoin network and by extension the US. Unlike North Korea, they might not seek to monetize their exploit, but instead aim to cause maximum chaos. All they would have to do is steal a couple of Satoshi’s old 50 BTC wallets (or the Binance coldwallet) and people would start to panic. If Q-day came abruptly, before any mitigation or migration plan was in place, with no shared agreement over what we should do about Q-vulnerable coins, China could exploit this to cause genuine network-destroying chaos. Bitcoin might still exist after a rushed and messy migration to post-quantum signatures, but the notion of surrendering 1-5m coins to the CCP might simply cause people to abandon the network in disgust. This possibility is genuinely existential and what motivated me to write this article in the first place. It worries me more than anything because China is indeed likely to obtain a CRQC (if the technology permits it) at some point. Even if they get it second, and Bitcoin isn’t prepared, they can still wreak havoc.

Chaotic neutral: Chaotic neutral is simply a law-abiding, private sector firm in the West. In terms of probabilities, assuming a CRQC is created, I think this is one of the most likely. I would wager that the first entity to produce a real CRQC would be a western (European or American) private company. Billion-dollar fundraises are not unusual in the quantum space these days. It is absolutely the case that in these fundraise discussions, the prospect of monetizing through Bitcoin is brought up. (It is generally whispered and hinted at rather than shouted from the rooftops.) Of course, outright theft would not be possible, but there is at a minimum a niche business to be had in mining for provably lost wallets. If that Welsh guy with the hard drive in the landfill remembers his public key, he would be an ideal client. White hat hacking, basically. And they might hold out hope that the US government could give them a privateer-esque license to go around stealing back stolen coins held by North Korea or a treasure-hunting license to go find “disregarded” coins (i.e., Satoshi’s stash, or coins that haven’t moved in over a decade). Like a wayward billionaire with a submarine trawling the ocean floor for shipwrecked Spanish doubloons. It’s technically still “stealing” but from some merchant 500 years ago. Digging up Satoshi’s coins is only a difference in degree.

These companies are already interested in Bitcoin, which is why they mention it in their pitch decks and their CEOs attend Bitcoin meetups. It’s not a secret that they view Bitcoin as an obvious, near term, highly intelligible means to monetize their otherwise very abstract technology. My point is that there are plenty of ways law-bound western private firms could still “attack” Bitcoin and justify it morally and legally.

Lawful good: this is perhaps the best-case scenario in my mind (barring a highly proactive and orderly fork protecting quantum-vulnerable coins, and moving Bitcoin over to PQ signatures). The lawful good actor in Q world would simply be … the US government.

I know, I know.

In the strange fantasy I’ve concocted in my head, the US government comes to care very much about Bitcoin’s long-term prospects – maybe the strategic reserve has swollen in size and Treasury doesn’t want it to simply vanish. Maybe it emerges that Satoshi is American and it becomes a matter of national pride. Or maybe the government doesn’t want trillions in savings of ordinary Americans to be deleted overnight.

So let’s say the US government gets their hands on a CRQC, either because they created it in-house at the NSA,10 or some American startup wins the race. They know that they have a very short window until China steals or copies the technology and builds one too.

Recall that after America empirically demonstrated a fission bomb in 1945 they only had 4 short years until the Soviets built a working bomb of their own. (The US assumed they would be the sole nuclear power for at least a decade, but Soviet espionage ensured that they were a fast follower.) The thing about scientific discoveries is that replicating them once the method is established tends to be much easier than figuring out how to do it in the first place. For instance, Germany spent all their time going down a heavy water dead end (you need tons of heavy water to moderate a reactor that can breed plutonium, and they could only make it at one plant in Norway, which got sabotaged). The US went the graphite route for its reactors, which Germany had discarded because they thought it was a dead end. In reality, Germany was using insufficiently pure graphite which tricked them into thinking it didn’t work. Had they known what Fermi and Szilard figured out – that boron impurities in the graphite were absorbing the neutrons – they could have built a bomb in time, too.

So I’m assuming that if the US builds a CRQC first, China will almost immediately know how to do it, either through industrial espionage, or simply reading press releases and figuring out the right modality and set of tradeoffs. At that point, it will be a matter of months until they are at parity.

So what does the US government do, fearful of China developing a CRQC and wreaking havoc on Bitcoin (among other things)? They authorize a private sector firm to pre-emptively “requisition” the quantum-vulnerable Bitcoin. (Let’s say that there are only 1-2 million quantum vulnerable coins left at this point, because the US has informed all the Bitcoiners of the risk and everyone else has moved over to PQ addresses.) They could do this by having the Attorney General provide a no-action letter to the quantum firm in question, allowing them to keep some percentage as a finder’s fee. The remainder of the Bitcoin (basically, Satoshi’s coins) would go right into the Bitcoin Reserve, where they would presumably be safe. The government could always maintain that if Satoshi returned and proved their identity beyond a shadow of a doubt they could rightfully claim their coins.

Or the government could simply requisition the coins directly, “for safe-keeping” of course. I think virtually all Bitcoiners would rather that the US government obtain the 1-2m BTC and commit to stewarding them responsibly as opposed to North Korea or China.

So depending on who obtains a CRQC first – a private sector entity, the US government, or a hostile foreign government – I think you can make the case that a huge tranche of coins would be vulnerable no matter what. For this reason, we should prepare for either theft by a hostile entity or a nevertheless unsettling seizure by a benign entity. No matter what, when Q-day arrives, some of the coins are going missing. Unless we do something first.

8. Quantum is a race with geopolitical stakes like AGI

Much like AGI or superintelligence, CRQCs are of paramount geopolitical importance. In fact, they matter more to governments than anyone else. The first government to get access to a CRQC will be able to break (non-upgraded) TLS/HTTPS, VPNs, un-migrated industrial control systems, and decrypt harvested traffic. Certain systems have hardcoded cryptography and can’t easily be upgraded so will be vulnerable. Think industrial robots, power grid controllers, medical implants, and elevator controls. Devices with burned root keys like routers, modems, set top boxes and so on cannot be upgraded and would also be vulnerable. Now consider long-lived satellites already in orbit. They can’t receive firmware updates to change their crypto systems either. A quantum attacker could impersonate ground control and commandeer them.

While many systems will eventually be migrated to PQ crypto, the world for decades will be populated with legacy connected systems that are nevertheless unable to upgrade and hence vulnerable to takeover. Any government in possession of a CRQC could wreak havoc for a while.

A government with a CRQC would also most likely be able to make rapid progress in physics research, with likely advancements in chemistry and materials science.

This complicates things for us crypto people. First of all, if quantum starts to look at all realistic, governments will intensify their efforts to be first. Bonus if they can be first while others don’t think they have the capability yet. Once everyone has QCs, presumably the entire world will upgrade to post-quantum cryptography to the extent they are able, and communications infrastructure will be re-encrypted. But if just one government has a QC, and they don’t tell anyone about it, they will have a window of opportunity to read virtually all classically-encrypted but quantum vulnerable traffic. Think: locations of nuclear submarines, military readiness information, knowledge of what satellites are looking at, classified fighter jet schematics, frontier AI model weights, theft of high-value corporate IP, and so on.

Governments are also harvesting encrypted data from their adversaries today, with the expectation that they could decrypt it in the future. So even if future governments get smart and start to use PQ cryptography to encrypt sensitive communications, today’s governments are probably still leaking information.

It’s not inconceivable to me that China or the US or both launch a kind of quantum Manhattan project if it becomes more and more evident that a CRQC will exist. Just recently the White House announced the Genesis Mission, “a dedicated, coordinated national effort to unleash a new age of AI‑accelerated innovation and discovery that can solve the most challenging problems of this century,” mentioning quantum as well. China could and probably has already accelerated QC research with little fanfare.

As with nuclear fission, timelines can compress quickly once a technological development is known to be theoretically possible. It was only in 1938 that nuclear fission was discovered experimentally and then theoretically explained. Less than 7 years later, a working prototype was detonated in New Mexico. In my view, the collective discovery that error correction can actually work is a catalyst of a similar magnitude. Many experts thought quantum computing was a dead end for decades because errors in quantum systems tended to be introduced faster than they could be corrected. Each additional qubit made the system worse, not better. It was only in the last year or so that researchers proved they could add qubits and reduce the net error in the system, creating the conditions for scale. Quantum supremacy had never realistically been demonstrated until Google’s 2019 demo or Quantinuum’s 2023/24 demos.

In my view, breakthroughs in error correction and gate fidelity are the proof points that should cause the expert consensus to be revised from “potentially impossible” to “likely and practical”. I expect that this will drive governments to dedicate significantly more resources to the task, further shortening timelines.

9. AI could accelerate the pace of quantum development

And of course, none of this is happening in isolation. It’s interesting to me that quantum is finally “happening” at around the same time as AI, and it’s indeed possible that 2027 ends up being the most interesting year in history, as the year in which we get both AGI and a CRQC.

AI is relevant to quantum in quite a few ways, and I view it as a potentially meaningful accelerant.

First of all, AI has trained investors to extrapolate exponential trends and consider what the world looks like a few short orders of magnitude away. If you were thinking in exponentials in 2019 or 2020, you realized that we could simply throw more data at these models and we could reliably get more and more intelligence. If you monetized this insight by going long OpenAI or NVIDIA you made a killing. Most investors were not thinking this way, but today they have been conditioned to reason like this. AI also taught us that capital can create massive speedups in an already exponential trend. Technology doesn’t progress in a vacuum. A decade ago, it would have been unthinkable to most that AI models would be passing the Turing test with perfect ease, that AI datacenter buildout would have eclipsed the telecom boom as a share of GDP, or that GDP growth would actually be largely driven by AI CAPEX spending. But the emerging utility of AI completely redirected economy-wide capital flows. Similarly, if we attain meaningful quantum supremacy in scientific and commercial applications (possible with 100s of logical qubits), investors will re-rate the odds of a utility-scale quantum computer, and accordingly pour more cash into the asset class. This dynamic of forward-looking exponential expectations which has now been hammered into investors’ heads by AI should carry over to quantum. Or as Scott Aaronson says, “Nowadays, of course, pessimism about technological progress seems hard to square with the revolution that’s happening in AI, another field that spent decades being ridiculed for unfulfilled promises and that’s now fulfilling the promises.”

Second, AI has already begun to accelerate scientific progress by assisting with mathematical discoveries. While AI hasn’t yet solved any major open problems in math like the Millennium Prize Problems, mathematicians have started to productively use AI to devise new conjectures about π and e (as with the Ramanujan Machine) and to find novel results in knot theory and combinatorics.

And for QC specifically, AI has begun to pay dividends. Google’s quantum unit was even rebranded as “Google Quantum AI” to acknowledge the important role of AI in designing QC. Google used ML and RL for error correction in their landmark Willow processor. In the last couple years a vast literature has emerged employing neural networks to assist in quantum error correction.11 It is evident that advances in machine learning and AI are accelerating quantum development, although the magnitude of this effect is hard to determine.

10. Credible people have revised their quantum timelines

This one is tenth because this is the scientific equivalent of hearsay, but I still find it notable that quite a few quantum luminaries have become more excited about quantum in the last year or so.

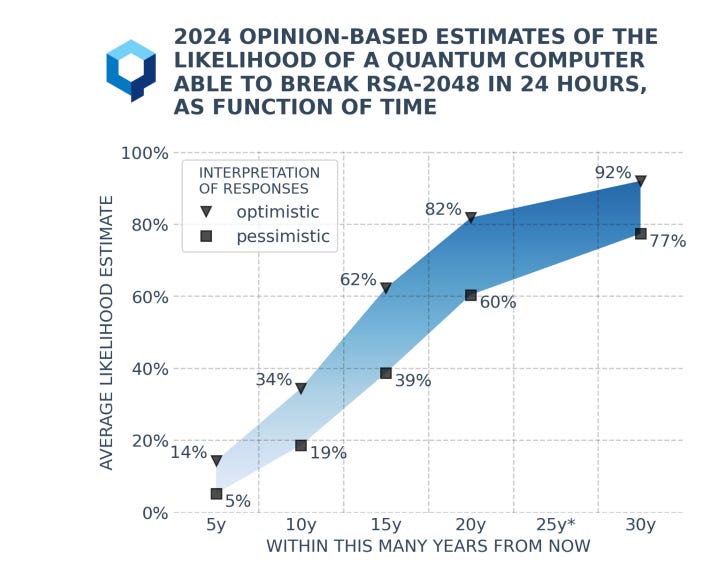

It’s worth prefacing that expert consensus, to the extent it exists, points to a markedly longer timeline than I am anticipating in this article. The best survey I can find of quantum experts is the Global Risk Institute’s Quantum Threat Timeline, which polls a number of quantum experts every year. For some reason, they didn’t do it in 2025, but in their 2024 edition the surveyed experts placed the 50% likelihood of an RSA break at 2040.12

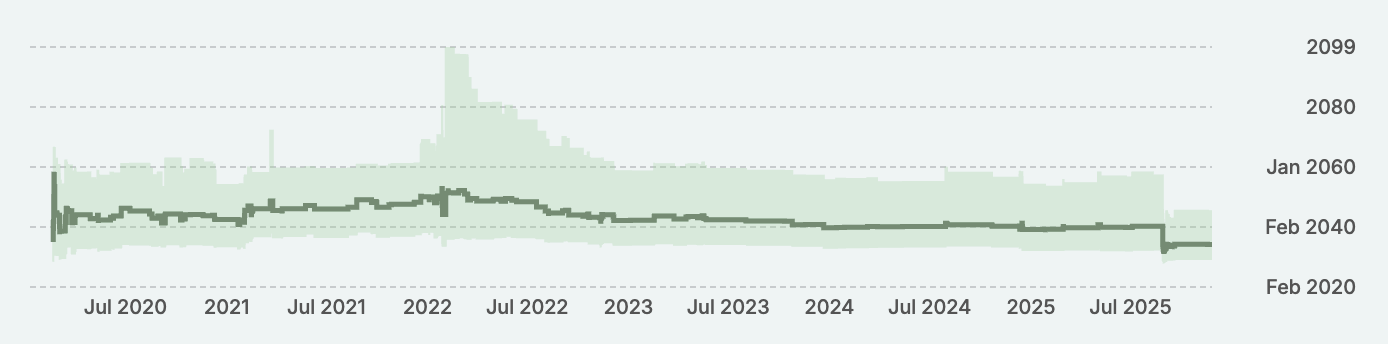

The forecasting site Metaculus, which aggregates expert consensus, currently lists the mean timeline for a quantum RSA break at 2034. This has actually come down significantly from just a couple years ago when the mean timeline was in the 2050s.

So the experts are still fairly skeptical about a near-term quantum break.

But expert consensus is sometimes wrong.

The person who may have done the most to revise quantum timelines earlier this year is Scott Aaronson, a very well-known professor and theoretical computer scientist. In preparation for this blog post I read his book “Quantum Computing Since Democritus” and I will say that while I enjoyed the philosophical digressions I wouldn’t recommend it unless you have a strong math background. Aaronson is specifically known for being a quantum computing skeptic of sorts; though it’s the subject he’s best known for, he is also vocally skeptical of the hyperbolic claims that tend to characterize firms in the quantum space. So reading his blog entries this year I was extremely surprised to see that he had meaningfully revised his view of QC’s viability. In September he wrote:

I started in quantum computing around 1998, which is not quite as long as some people here, but which does cover most of the time since Shor’s algorithm and the rest were discovered. So I can say: this past year or two is the first time I’ve felt like the race to build a scalable fault-tolerant quantum computer is actually underway. Like people are no longer merely giving talks about the race or warming up for the race, but running the race.

And

I’m now more optimistic than I’ve ever been that, if things continue at the current rate, either there are useful fault-tolerant QCs in the next decade, or else something surprising happens to stop that.

As far as “why now,” he refers to 2-qubit gate fidelities, reminding us that at 99.99% accuracy, we can fix errors faster than they are introduced. At the start of his career we were in the 50% range, but in the last year some firms have demonstrated 99.9%.13

This is the exact same answer I got from a physics PhD who works in quantum when I interviewed him for this article. “Error correction is everything,” he told me. We are making progress, and people are starting to notice.

Back to Aaronson. Regarding ECC and RSA, he says:

To any of you who are worried about post-quantum cryptography—by now I’m so used to delivering a message of, maybe, eventually, someone will need to start thinking about migrating from RSA and Diffie-Hellman and elliptic curve crypto to lattice-based crypto, or other systems that could plausibly withstand quantum attack. I think today that message needs to change. I think today the message needs to be: yes, unequivocally, worry about this now. Have a plan.

As I was in the middle of writing this article, Aaronson surprised me by publishing a blog post with an even more ebullient prediction.

Evidence continues to pile up that we are not living in the universe of Gil Kalai and the other quantum computing skeptics. Indeed, given the current staggering rate of hardware progress, I now think it’s a live possibility that we’ll have a fault-tolerant quantum computer running Shor’s algorithm before the next US presidential election.

This was widely reported on, and seemingly influenced Ethereum’s Vitalik Buterin, who tweeted a few days later:

I think I sorta forgot elliptic curves exist over the last year.

(obviously privacy protocols still need them until we figure out proof aggregation, but in general they’re on their way out because looming quantum risk)

And then he followed up by flatly stating “elliptic curves are going to die” at Devconnect.

Aaronson’s comments caused such an uproar that he was forced to write a post begging people to stop getting so excited about it. But even in that attempt to quell the excitement, he reinforced the danger Bitcoin faces:

[I]f you think Bitcoin, and SSL, and all the other protocols based on Shor-breakable cryptography, are almost certainly safe for the next 5 years … then I submit that your confidence is also unwarranted. Your confidence might then be like most physicists’ confidence in 1938 that nuclear weapons were decades away, or like my own confidence in 2015 that an AI able to pass a reasonable Turing Test was decades away.

Elsewhere, while you have Jensen Huang predicting useful quantum is two decades out, the founder of Google Quantum AI, Hartmut Neven, said in February: “We’re optimistic that within five years we’ll see real-world applications that are possible only on quantum computers.” In October, Google arguably demonstrated quantum advantage14 for the very first time on their Willow processor. I could cite the CEOs of all the quantum companies, but they have a financial incentive to overhype their capabilities, so that won’t be very persuasive.

One particularly detailed case of a revised timeline is Marin Ivezic’s June 2025 analysis in which he accelerates his Q-day timeline from 2032 to 2030. He cites three developments this year which shortened his timelines: IBM’s 2025 timeline aiming for 200 logical qubits by 2029; the May 2025 Gidney paper15 showing that perhaps 1000 logical qubits might be sufficient to factor RSA2048; and Oxford’s result demonstrating 10⁻⁷ gate error.

Put those three facts together and my old 2032 Q-Day estimate is essentially baked in even if the field made zero further scientific breakthroughs after June 2025 and simply executed on what has just been published. Realistically, though, we’ll keep squeezing qubit overhead with better error-correction codes, smarter factoring circuits, and faster hardware integration. That steady drumbeat of incremental wins makes me believe that I should bring the estimate closer to 2030.

This entry is tenth on my list because it’s just a bunch of people saying things, while expert consensus remains fairly adamant that a CRQC is a 2035-2040+ phenomenon. However, the dissenters are notable enough to warrant mentioning.

What should Bitcoiners do today?

Let’s say you’re persuaded by all the above, and you’re more than a bit alarmed. I will cover all the proposed remedies to a CRQC and more detailed effects on various blockchains in my third installment in the series. But what should Bitcoiners do about the problem right now?

As we’ve established, a CRQC destroys a key assumption that Bitcoin is based on – the notion that knowledge of a private key is tantamount to ownership. Post Q-day, mere knowledge of the private key is no longer sufficient to establish someone as the rightful owner of a given UTXO.

The first major risk is to quantum-vulnerable bitcoin in the three exposed formats: p2pk, p2ms, and p2tr. Unless you’re Satoshi, you probably haven’t used p2pk outputs. P2ms is an obsolete multisig format that only secures 57 BTC (multisig today is done with p2sh, p2wsh, or Taproot). Weirdly, Taproot addresses (“bc1p…”) are more exposed to quantum risk than other contemporary address types, since they can expose a public key in the output.16 You perhaps can’t fault the developers behind the Taproot upgrade because they may not have been thinking about quantum risk back in 2018 when they first proposed it. But the grim truth is, if you are worried about a quantum breach, you should regress to using only hashed address formats like p2pkh (“1…”), p2wpkh (“bc1q…”), or p2sh (“3…”).

These are safe on Q-day IF you have not previously spent from those addresses. But even for hashed addresses, the moment you broadcast a transaction from one of those addresses, you expose your public key to an attacker. So pragmatically, what you should do is never reuse addresses, and if you do spend from an address, don’t leave any change or residual funds there. Wherever your funds are at rest, they must be in an address that has never broadcast an outgoing spend. And this is already established wisdom, but don’t leak your xpub anywhere online – it allows a quantum attacker to view all public keys connected to that master key.
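The hygiene rules above can be sketched as a small check. This is a minimal illustration, not a wallet tool: it assumes mainnet addresses, looks only at the prefixes named in the text, performs no checksum validation, and relies on you to supply whether the address has ever broadcast a spend. The function names and the `ADDRESS_TYPES` table are hypothetical, and p2pk and p2ms are left out because they have no standard address encoding.

```python
# Address prefixes and script types as listed in the text (mainnet only).
# Assumption: this inspects the prefix alone and does no checksum validation.
ADDRESS_TYPES = {
    "bc1p": ("p2tr", False),   # Taproot: public key can sit in the output
    "bc1q": ("p2wpkh", True),  # hashed (this prefix also covers p2wsh)
    "1": ("p2pkh", True),      # hashed
    "3": ("p2sh", True),       # hashed
}


def classify_address(address: str) -> tuple[str, bool]:
    """Return (script_type, is_hashed) based on the address prefix."""
    lowered = address.strip().lower()
    for prefix, info in ADDRESS_TYPES.items():
        if lowered.startswith(prefix):
            return info
    raise ValueError(f"unrecognized address prefix: {address!r}")


def public_key_exposed(address: str, has_spent_before: bool) -> bool:
    """True if coins resting at this address have a public key visible on chain."""
    _, is_hashed = classify_address(address)
    # Unhashed formats expose the key immediately; hashed formats expose it
    # only once a spend from the address has been broadcast.
    return (not is_hashed) or has_spent_before


if __name__ == "__main__":
    # Placeholder strings, not real addresses.
    for addr, spent in [("bc1p-placeholder", False),
                        ("bc1q-placeholder", False),
                        ("1-placeholder", True)]:
        print(addr, "exposed" if public_key_exposed(addr, spent) else "hashed")
```

The spend history is passed in as a flag because it has to come from chain data, which a prefix check cannot provide.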

Alternatively, if you don’t trust yourself to maintain good wallet hygiene, you could send your coins to a custodian that credibly signals a state of quantum readiness. At the moment, few if any custodians have signaled that they are taking active measures to quantum-proof their coins, but one assumes they will as Q-day approaches. Keep in mind, though, that custodians will also be bigger honeypots for quantum-enabled hackers. And truthfully, until Bitcoin adopts a post-quantum signature scheme at the protocol level, any coins in transit and many coins at rest will be vulnerable.

Realistically, beyond making sure your coins are sitting in non-reused, hashed addresses, ordinary Bitcoiners have little to do ahead of Q-day. Instead, you should engage in “watchful waiting” – mindful of the threat, but not overly concerned unless something changes. Informationally, a useful tool would be a set of prediction markets organized like options strikes going out to 2035. The prediction markets could be structured as follows:

[x] bits of ECC broken by a quantum computer by [date]

So far, researchers have broken 6-bit elliptic curve keys using QCs. The pace of breakage and the future risk implied by the prediction market will allow us to both better assess when Q-day might arrive (informing the urgency of making protocol-level changes), and also to hedge our Bitcoin exposure. I expect the 256-bit markets for 2027 and onwards will be the most liquid, and if you are concerned about your Bitcoin position losing value because of uncertainty surrounding a quantum break, especially an “early break” before Bitcoin has adapted, you could go long Yes on the prediction markets. Think about it like catastrophe insurance or long-dated out of the money SPY puts. Since the market implied odds ought to be fairly low (if I had to guess, I think the market will price a clean break by 2030 at around 5%), the cost of insurance should be low as well. All of this presumes sufficient liquidity. And Polymarket will have to find a way to deliver your coins post-quantum break, which might be easier said than done.
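To make the insurance analogy concrete, here is a rough sketch of the arithmetic. It assumes a simple binary contract where each Yes share costs its quoted price and pays out 1 unit if the break happens, and it ignores fees, slippage, liquidity, and the settlement risk mentioned above. The function names and the 100,000 figure are hypothetical; the 5% price is the guess from the paragraph above.

```python
def hedge_cost(loss_to_cover: float, yes_price: float) -> float:
    """Premium paid today to cover `loss_to_cover` with binary Yes shares.

    Assumption: each share costs `yes_price` and pays 1 unit if the break occurs.
    """
    if not 0.0 < yes_price < 1.0:
        raise ValueError("yes_price must be strictly between 0 and 1")
    shares = loss_to_cover  # one share per unit of loss to be covered
    return shares * yes_price


def hedge_payoff(loss_to_cover: float, yes_price: float, break_happens: bool) -> float:
    """Net result of the hedge alone (payout minus premium)."""
    payout = loss_to_cover if break_happens else 0.0
    return payout - hedge_cost(loss_to_cover, yes_price)


def hedge_expected_value(loss_to_cover: float, yes_price: float,
                         own_probability: float) -> float:
    """Expected net result of the hedge under your own probability of a break."""
    return own_probability * loss_to_cover - hedge_cost(loss_to_cover, yes_price)


if __name__ == "__main__":
    # Hypothetical: cover a 100,000 loss with the market pricing a break at 5%.
    print("premium:", hedge_cost(100_000, 0.05))
    print("net if break:", hedge_payoff(100_000, 0.05, True))
    print("net if no break:", hedge_payoff(100_000, 0.05, False))
    print("EV if you believe 10%:", hedge_expected_value(100_000, 0.05, 0.10))
```

The hedge only has positive expected value when your own probability exceeds the market price, which is the same logic as buying any underpriced insurance.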

Even though you may be a little worried reading this, I am not panicking. I think the best way to deal with an uncertain future is to embrace unpleasant truths, rather than believe pleasant fantasies. Let’s say quantum happens – sometime in the next 5, 10 or 15 years. It’s better to acknowledge that it’s a problem today and get started on the debates that matter, so that we aren’t caught by surprise on Q-day:

What do we do about Satoshi’s and other abandoned, vulnerable coins?

What kind of protocol level change to Bitcoin should we make and how?

What sort of post-quantum cryptography should we adopt?

When should we initiate the migration process?

How long do we realistically have?

That’s the point of this series. Not to spread panic, but to galvanize action so we can tackle this future problem in an orderly manner rather than dealing with forks, theft, or chain halts down the road.

Update 11/26/25: updated language around a potential fork to render vulnerable p2pk coins unspendable. Such a fork would be a soft fork, since it tightens protocol rules

Actually, some people do. There are a lot of very smart people who are 100% focused on preventing the emergence of a misaligned AI, stopping AI development altogether, or researching AI safety.

It bears repeating that I have no formal math training beyond some graduate statistics/econometrics

Yes the title of this piece is a Jason Bourne reference. I love that series

Q-Day is the day that a CRQC is created. It’s not “the day that quantum becomes useful”. It’s the day that a QC capable of breaking ECC/RSA emerges

For a more rigorous treatment of this section, I recommend Chaincode’s report from May of this year

There is a quantum algorithm called Grover’s which gives you a quadratic speedup on a QC for a brute-force search, like one against SHA256, but that only reduces the search time to the square root of the search space (on the order of 2^128 steps instead of 2^256 for a 256-bit search)

See section 6 in this article for a more thorough explanation

This is where Bitcoin came from anyway, so it would be poetic if it happened this way

See for instance Bausch et al 2024

RSA2048 and ECC256 are within the same order of magnitude of difficulty. Before 2025, RSA was thought to require around 6k logical qubits to ECC’s 2k. But a new result brought RSA factorization down to around 1k qubits.

Just recently, Quantinuum demonstrated 98 qubits with 99.92% fidelity with their Helios processor. IonQ announced a 2-qubit gate in a smaller system with 99.99% fidelity.

Running a computation on a quantum computer faster (13,000x in this case) than it could be run on a classical machine

Taproot was a mistake.
